AWS Project: Web App (+IaC, Containerization, & CI/CD Pipelines)

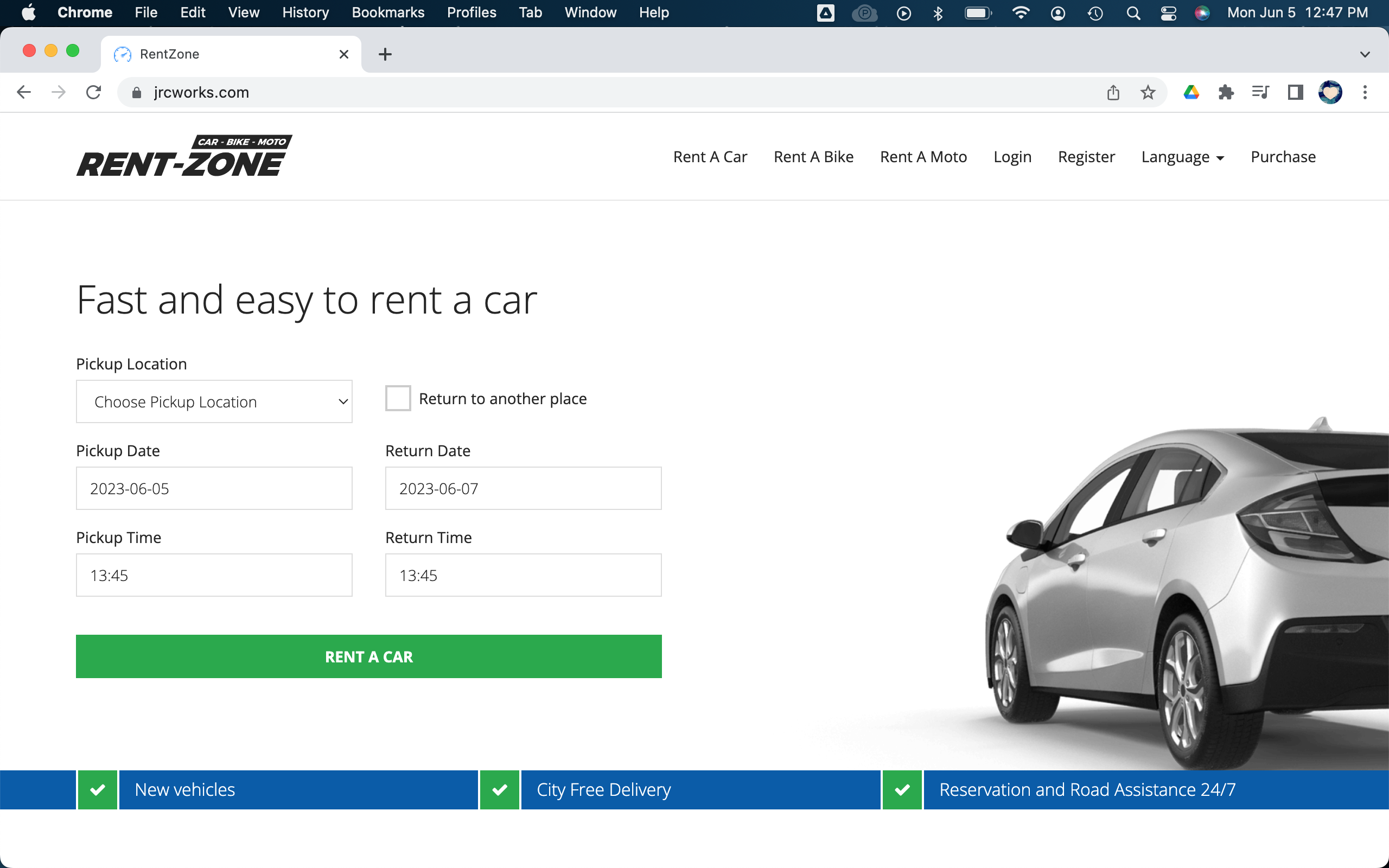

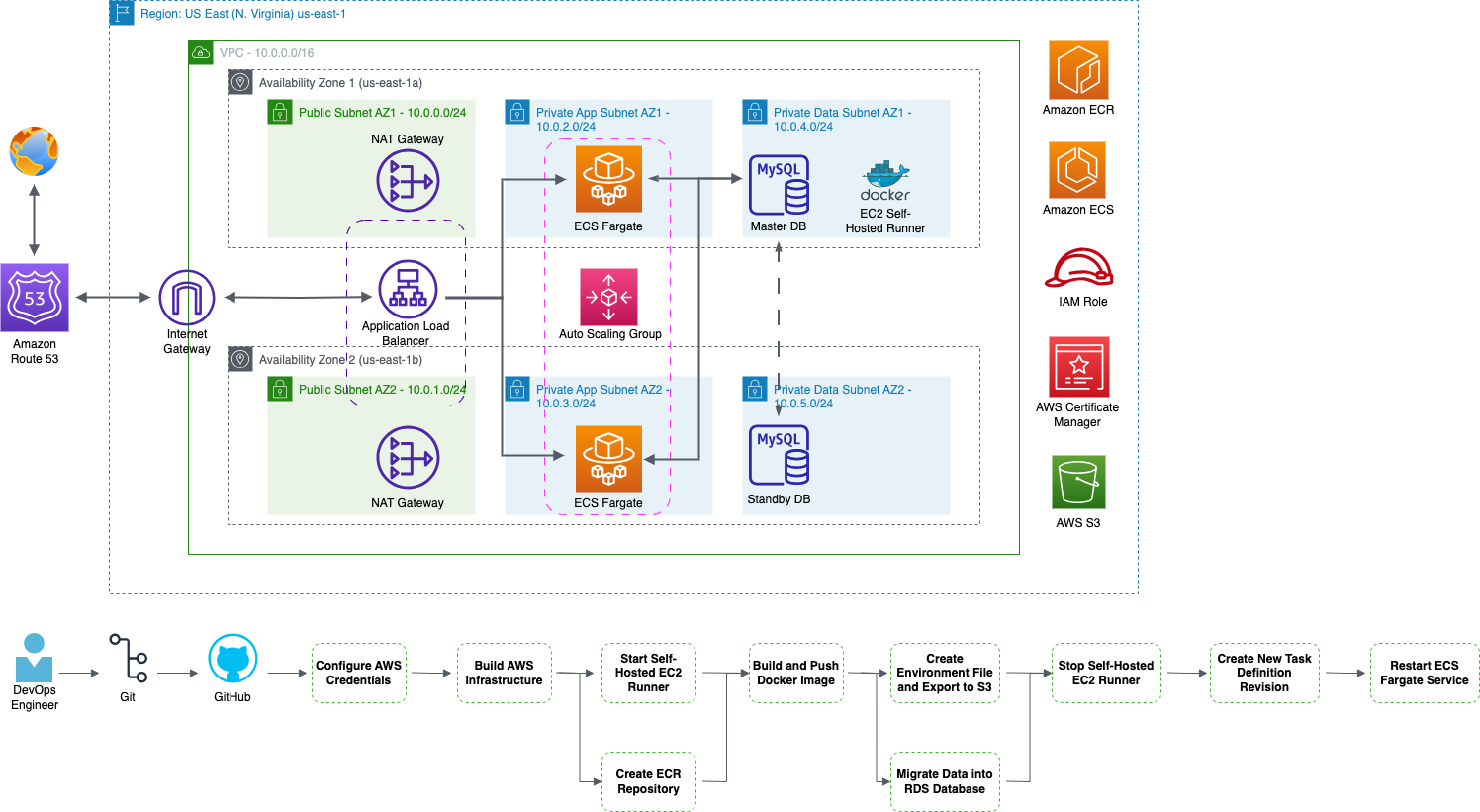

This project covers how to deploy a dynamic web application in AWS using core AWS services such as VPC networking (public and private subnets, NAT gateways, security groups, etc.), RDS databases (MySQL), S3 buckets, application load balancers (ALBs), auto scaling groups (ASGs), ECR and ECS, Route 53, Secrets Manager, and more. It also covers:

- containerization, including building a Docker image and pushing it to Amazon ECR;
- deploying the application's infrastructure as code (IaC) with Terraform;
- building continuous integration and continuous delivery (CI/CD) pipelines with GitHub Actions.

Project provided by AOSNote.

Project Code on GitHub

Step 1: Getting Set Up

This project requires several tools, so we will start by setting them up on our local computer.

1. Install Terraform.

2. Sign up for a GitHub account.

3. Install Git.

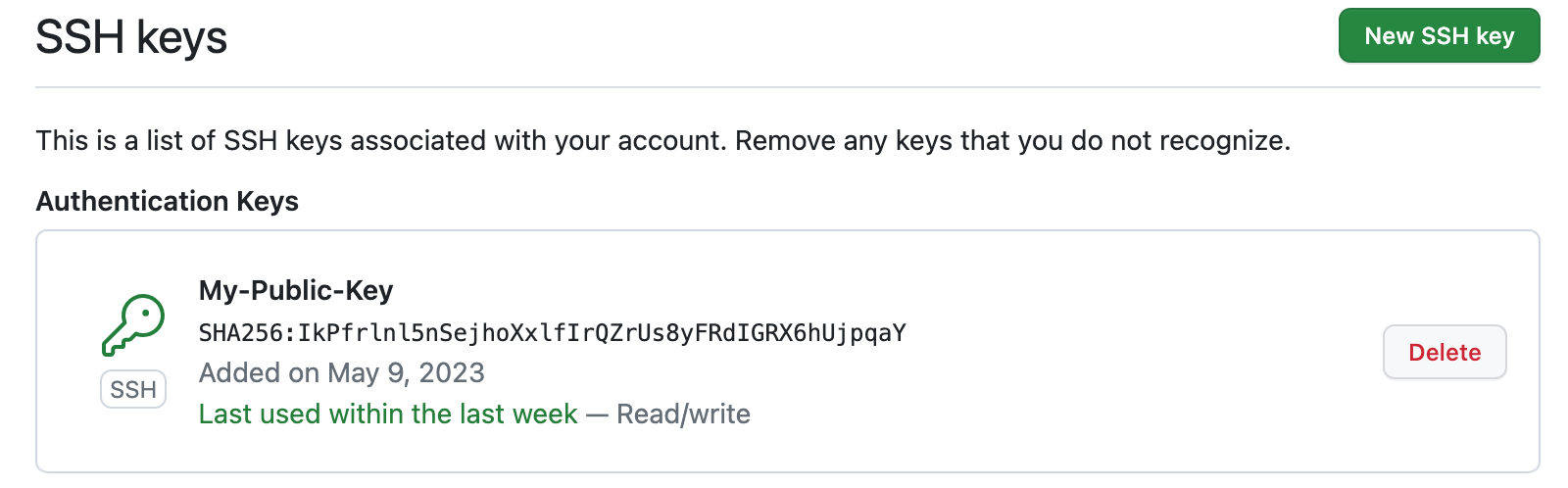

4. Generate a key pair for secure connections (Mac). This is so we can clone our GitHub repository.

ssh-keygen -b 4096 -t rsa

5. Add public SSH key to GitHub.

7. Install Terraform extensions (Terraform and Hashicorp Terraform).

8. Install AWS CLI.

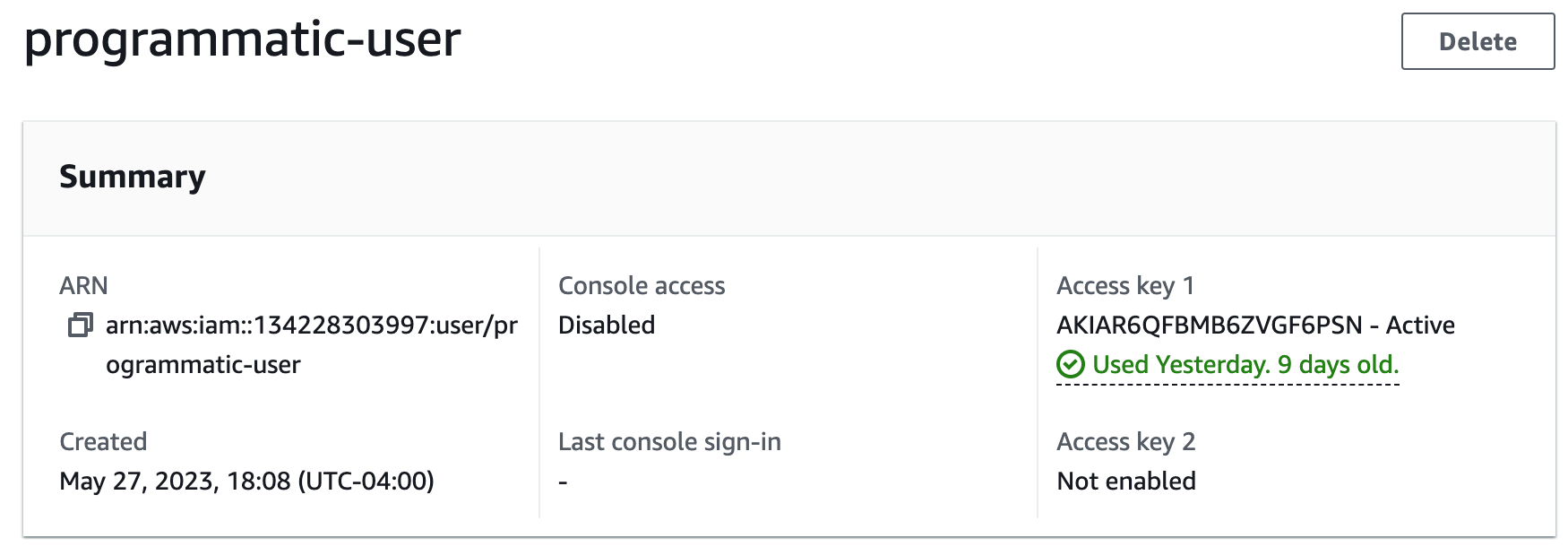

9. Create an IAM user in AWS.

10. Generate an access key for the IAM user. This is so the user can have programmatic access to the AWS account.

11. Create a profile. We will do this by running the AWS configure command and providing the access key credentials we just created.

aws configure
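Before moving on, it can help to confirm two things: the tools are on our PATH, and the new profile actually authenticates. The sketch below assumes a Bash shell. check_tools and verify_aws_identity are hypothetical helper names. aws sts get-caller-identity is the standard AWS CLI call that reports the account and IAM identity behind the configured credentials.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking the local setup from Step 1.

# Print each command name that is not installed; return non-zero if any are missing.
check_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Ask AWS which account and IAM identity the given profile resolves to.
# Assumes the AWS CLI is installed and `aws configure` has been run.
verify_aws_identity() {
  local profile="${1:-default}"
  aws sts get-caller-identity --profile "$profile" --output table
}
```

For example, check_tools terraform git aws && verify_aws_identity prints the IAM user's ARN once everything is in place.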

Step 2: Create the GitHub Repository

GitHub repositories are storage spaces where we can store and manage our code, collaborate with others, and track changes using Git version control. Each repository contains files, branches, and commit history.

1. Create the repository.

2. Clone the repository to our local computer:

```bash
git clone <ssh clone url>
```

3. Open the cloned repository in VS Code, then remove the .tfvars entries from the .gitignore file so that Git no longer ignores .tfvars files. Our pipeline needs the .tfvars file committed to the repository; if Git keeps ignoring it, the pipeline will break as we build it.
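Here is a minimal sketch of this .gitignore edit. It assumes the file came from a template that lists *.tfvars patterns, and allow_tfvars is a hypothetical helper name. It deletes every line that mentions .tfvars. Because the .tfvars file will then be committed, it is worth keeping credentials such as database passwords out of it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: stop Git from ignoring .tfvars files by deleting every
# line of the given .gitignore that mentions ".tfvars" (e.g. "*.tfvars", "*.tfvars.json").
allow_tfvars() {
  local gitignore="${1:-.gitignore}" tmp
  if [ ! -f "$gitignore" ]; then
    echo "no such file: $gitignore" >&2
    return 1
  fi
  tmp="$(mktemp)"
  # grep -v exits with status 1 when no lines are selected, so ignore its status.
  grep -v '\.tfvars' "$gitignore" > "$tmp" || true
  # Copy back with cat so the original file keeps its permissions.
  cat "$tmp" > "$gitignore"
  rm -f "$tmp"
}
```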

Step 3: Add Terraform Code

Terraform allows us to define and manage our infrastructure as code (IaC), automating the creation, modification, and destruction of resources across different cloud providers and environments.

With this code, we will build our:

- VPC

- Internet Gateways

- Public Subnets

- Private Subnets

- Route Tables

- NAT Gateways

- Security Groups

- RDS Database

- Application Load Balancer

- S3 Bucket

- ECS Fargate Service

- Auto Scaling Group

- Route 53 Record Set

- AWS Certificate

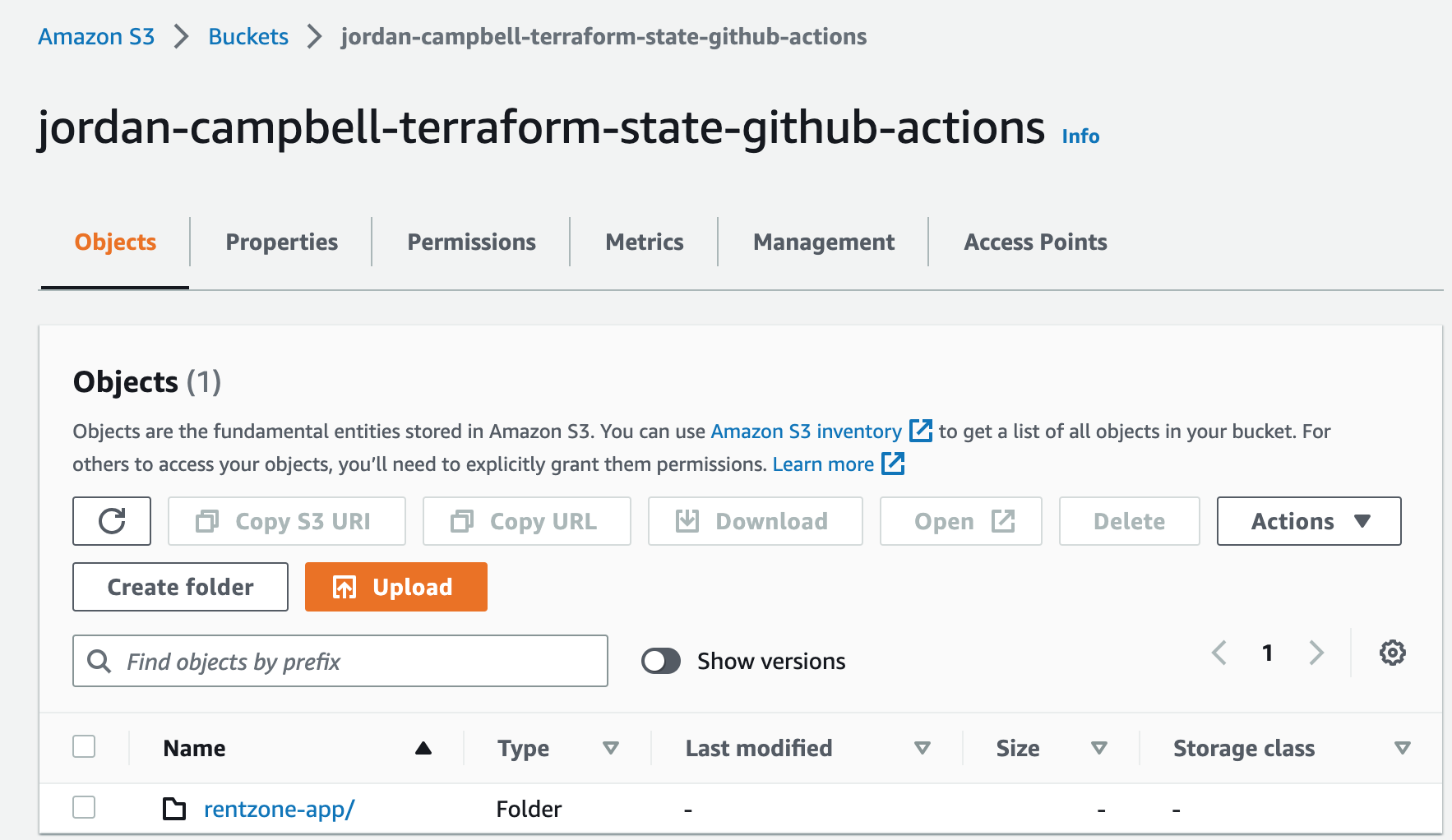

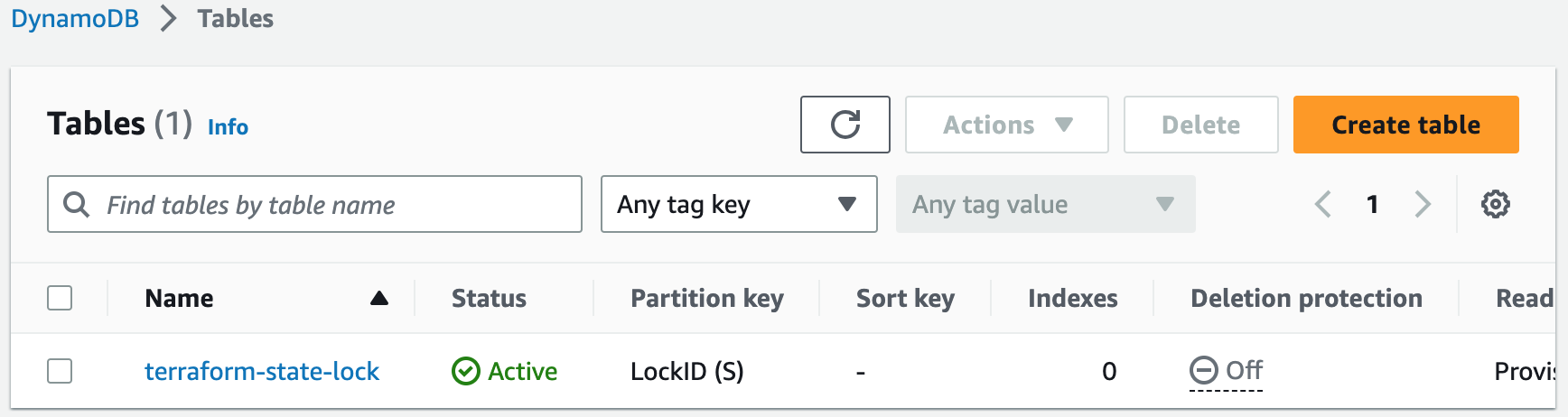
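The pipeline we build later reads a fixed set of Terraform outputs from the iac folder, so the Terraform code has to declare each of them. Below is a minimal sketch of a check. It assumes the plain terraform output format of one name = value line per output. REQUIRED_OUTPUTS lists the names the workflow reads, and missing_outputs is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Output names that the deploy pipeline reads with `terraform output -raw`.
REQUIRED_OUTPUTS=(
  image_name domain_name rds_endpoint image_tag
  private_data_subnet_az1_id runner_security_group_id
  task_definition_name ecs_cluster_name ecs_service_name
  environment_file_name env_file_bucket_name
)

# Read `terraform output` text on stdin and print each required name that is absent.
# Returns non-zero when at least one output is missing.
missing_outputs() {
  local text name status=0
  text="$(cat)"
  for name in "${REQUIRED_OUTPUTS[@]}"; do
    if ! grep -q "^${name} = " <<<"$text"; then
      echo "$name"
      status=1
    fi
  done
  return "$status"
}
```

After an apply, (cd iac && terraform output) | missing_outputs prints nothing when every output exists.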

Step 4: Create the Terraform Backend

Terraform's S3 backend stores the state file remotely in an Amazon S3 bucket, which provides durable storage. It can optionally use an Amazon DynamoDB table as a locking mechanism to prevent concurrent modifications of the state.

1. Create an S3 bucket to store the Terraform state. Terraform uses this state to track and manage changes to our infrastructure.

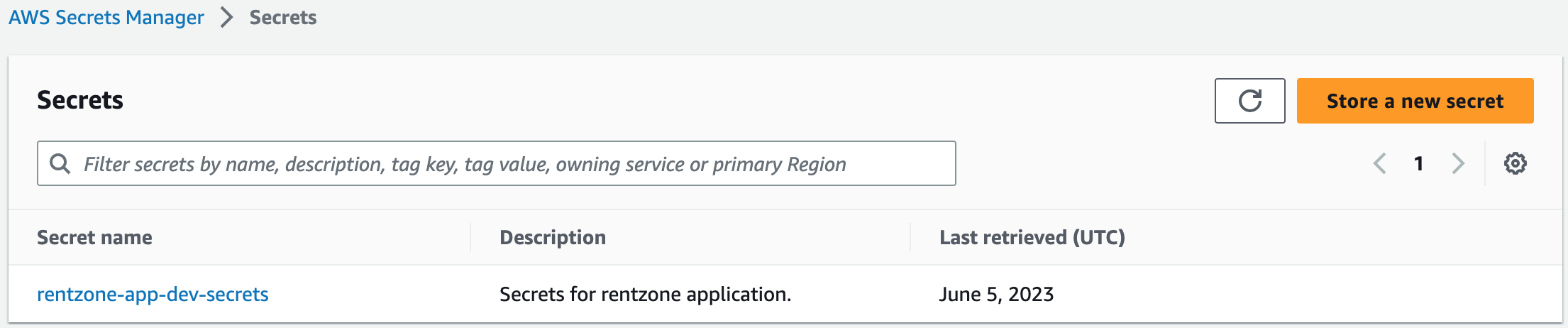
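Once the bucket exists, the Terraform code points at it through a backend block. Here is a minimal sketch that prints one. It assumes the standard S3 backend arguments: bucket, key, region, and the optional dynamodb_table for state locking. write_backend_config is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a Terraform S3 backend block.
# Usage: write_backend_config <bucket> <state-key> <region> [dynamodb-lock-table]
write_backend_config() {
  local bucket="${1:-}" key="${2:-}" region="${3:-}" lock_table="${4:-}"
  if [ -z "$bucket" ] || [ -z "$key" ] || [ -z "$region" ]; then
    echo "usage: write_backend_config <bucket> <key> <region> [lock-table]" >&2
    return 1
  fi
  echo 'terraform {'
  echo '  backend "s3" {'
  echo "    bucket = \"$bucket\""
  echo "    key    = \"$key\""
  echo "    region = \"$region\""
  # DynamoDB locking is optional; only emit it when a table name is given.
  if [ -n "$lock_table" ]; then
    echo "    dynamodb_table = \"$lock_table\""
  fi
  echo '  }'
  echo '}'
}
```

For example, write_backend_config my-terraform-state app/terraform.tfstate us-east-1 > iac/backend.tf. The bucket and key here are placeholders for our own names.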

Step 5: Create Secrets in AWS Secrets Manager

AWS Secrets Manager is a service that helps us securely store and manage sensitive information, such as database credentials and other secrets used by our applications.

1. Go to AWS Secrets Manager and choose Store a new secret. Our secret keys are as follows:

2. Add our values and store the secret.

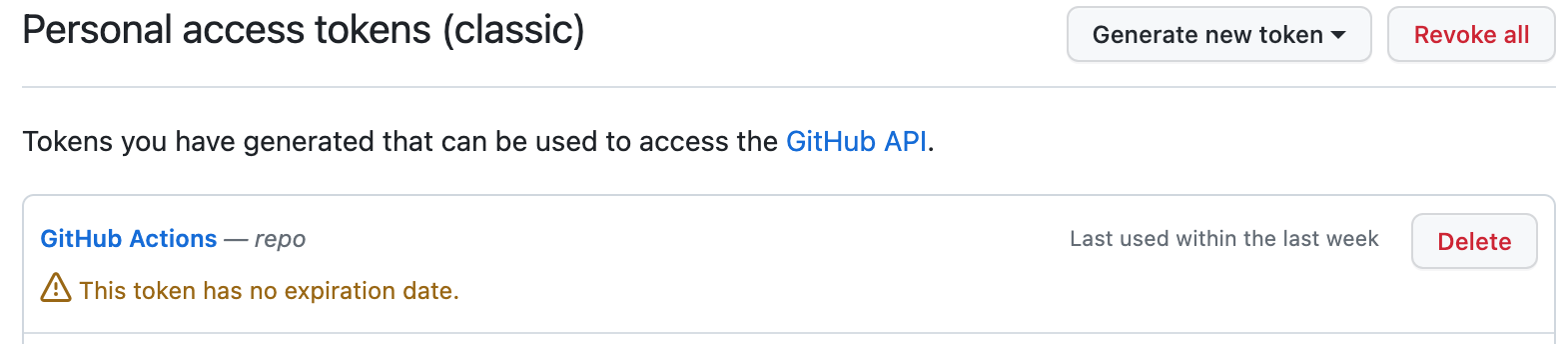

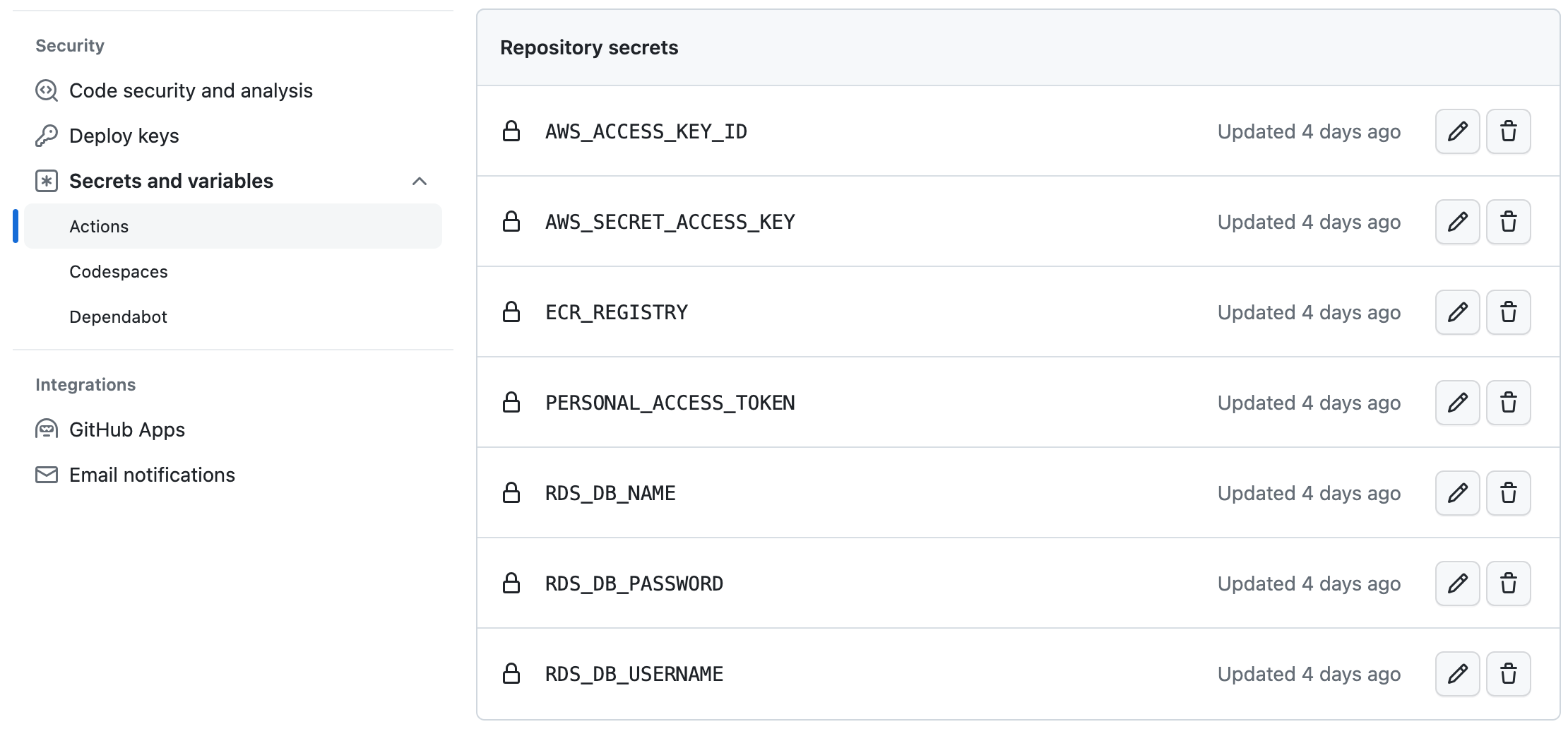
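The console steps above can also be scripted. Here is a minimal sketch. It assumes the secret is stored as a flat JSON object of key/value pairs, which is what the console's key/value editor produces. build_secret_json and store_app_secret are hypothetical helpers. Any secret name or keys passed to them are our own placeholders, not the project's actual keys.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn KEY=VALUE arguments into a flat JSON object string.
# Escapes backslashes and double quotes; assumes values contain no control characters.
build_secret_json() {
  local pair key value json="" sep=""
  for pair in "$@"; do
    key="${pair%%=*}"      # text before the first "="
    value="${pair#*=}"     # text after the first "="
    value="$(printf '%s' "$value" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')"
    json+="${sep}\"${key}\":\"${value}\""
    sep=","
  done
  printf '{%s}\n' "$json"
}

# Store the JSON as a new secret. Assumes configured AWS CLI credentials.
# Usage: store_app_secret <secret-name> KEY=VALUE ...
store_app_secret() {
  local name="$1"; shift
  aws secretsmanager create-secret --name "$name" --secret-string "$(build_secret_json "$@")"
}
```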

Step 6: Create GitHub Personal Access Token and Repository Secrets

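1. Create a GitHub personal access token. It is used in two places:
   - Docker uses it to clone our application's code repository when we build our Docker image.
   - The ec2-github-runner action uses it to register our self-hosted runner with GitHub.

2. Add the repository secrets that our workflow references (Settings > Secrets and variables > Actions): AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, ECR_REGISTRY, PERSONAL_ACCESS_TOKEN, RDS_DB_NAME, RDS_DB_USERNAME, and RDS_DB_PASSWORD.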
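A repository secret that was never set simply expands to an empty value at run time, so it can be worth checking the list before the first run. Here is a minimal sketch. It assumes the GitHub CLI (gh) is installed and authenticated. missing_repo_secrets is a hypothetical helper: it reads secret names, for example the output of gh secret list, on stdin and prints any secret the workflow references that is not set.

```bash
#!/usr/bin/env bash
# Secrets referenced as ${{ secrets.* }} in deploy-pipeline.yml.
WORKFLOW_SECRETS=(
  AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY ECR_REGISTRY PERSONAL_ACCESS_TOKEN
  RDS_DB_NAME RDS_DB_USERNAME RDS_DB_PASSWORD
)

# Read lines whose first column is a secret name; print each required secret not found.
missing_repo_secrets() {
  local names name status=0
  names="$(awk '{print $1}')"
  for name in "${WORKFLOW_SECRETS[@]}"; do
    if ! grep -qx "$name" <<<"$names"; then
      echo "$name"
      status=1
    fi
  done
  return "$status"
}
```

For example, gh secret list | missing_repo_secrets. Any name it prints can then be added with gh secret set NAME --body "value".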

Step 7: Create GitHub Actions Workflow File

Workflow files are configuration files used in CI/CD systems to automate the steps for building, testing, and deploying software when specific events occur. They streamline the software development process by defining the workflow up front and automating its tasks. A workflow is a configurable automated process made up of one or more jobs, and we define its configuration in a YAML file.

1. Create a folder called .github/workflows in the root of our repository. GitHub Actions only looks for workflow files in this folder.

2. Inside this folder, create a file called deploy-pipeline.yml.

This is the workflow file we will use to build our CI/CD pipeline.
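These two steps amount to one mkdir and one new file. Here is a minimal sketch. scaffold_workflow is a hypothetical helper that creates the file under a given repository root. It writes only the workflow's name line and never overwrites an existing file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create .github/workflows/deploy-pipeline.yml under a repo root
# without overwriting an existing file; prints the file path.
scaffold_workflow() {
  local root="${1:-.}"
  local file="$root/.github/workflows/deploy-pipeline.yml"
  mkdir -p "$(dirname "$file")"
  if [ ! -e "$file" ]; then
    printf 'name: Deploy Pipeline\n' > "$file"
  fi
  echo "$file"
}
```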

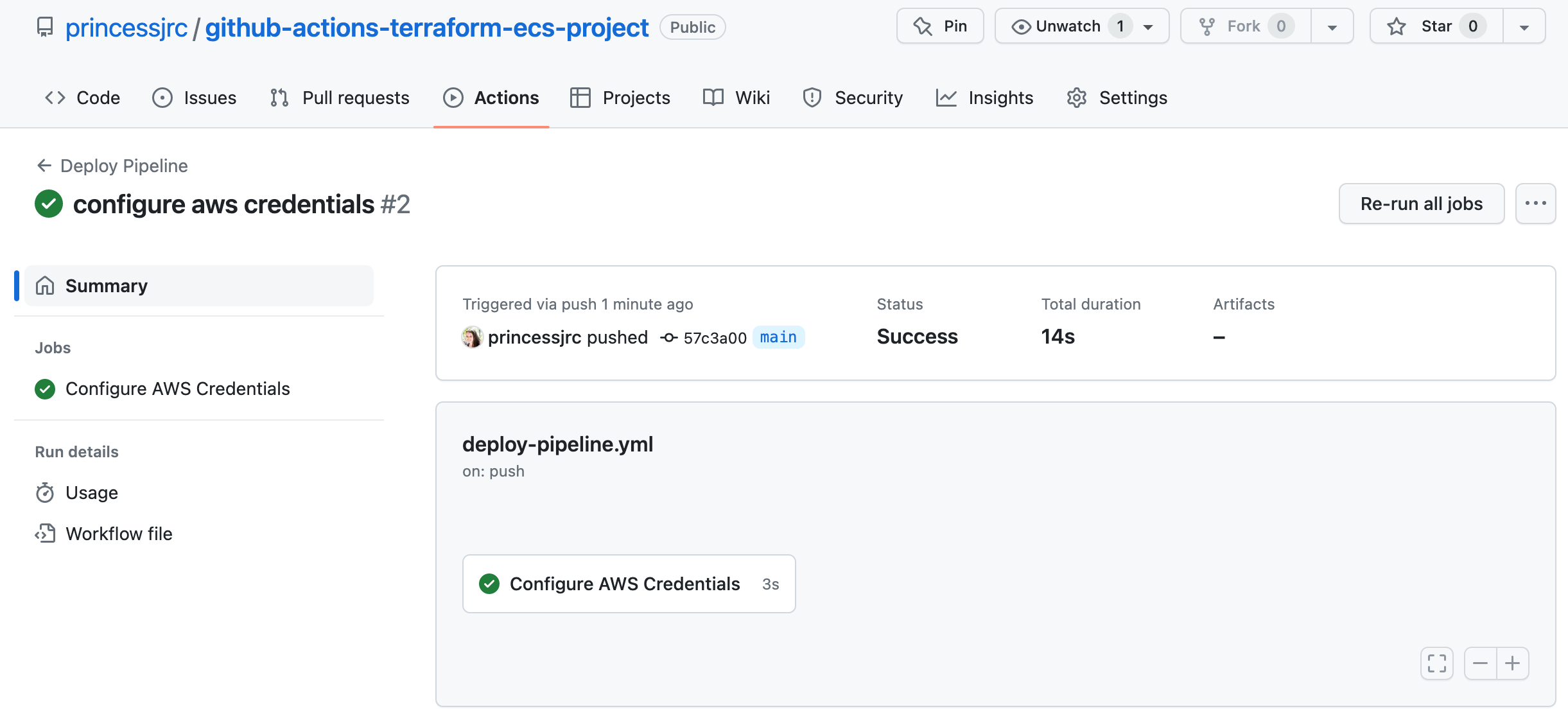

Step 8: Create a GitHub Actions Job to Configure AWS Credentials

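The first job in our pipeline configures our IAM credentials, which verifies our access to AWS and authorizes our GitHub Actions workflow to create new resources in our AWS account.

Each job runs on a fresh runner, so the later jobs do not inherit this job's configuration. Instead, they authenticate through the AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_REGION variables in the workflow-level env block, which the AWS CLI and Terraform read automatically.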

```yaml
name: Deploy Pipeline

on:
  push:
    branches: [main]

env:
  AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
  AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
  AWS_REGION: us-east-1
  GITHUB_USERNAME: princessjrc
  REPOSITORY_NAME: application-codes
  WEB_FILE_ZIP: rentzone.zip
  WEB_FILE_UNZIP: rentzone
  FLYWAY_VERSION: 9.8.1
  TERRAFORM_ACTION: apply

jobs:
  # Configure AWS credentials
  configure_aws_credentials:
    name: Configure AWS Credentials
    runs-on: ubuntu-latest
    steps:
      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v1-node16
        with:
          aws-access-key-id: ${{ env.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ env.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ env.AWS_REGION }}
```

aws-actions/configure-aws-credentials -- Configure your AWS credentials and region environment variables for use in other GitHub Actions.
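The workflow sets AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_REGION in its top-level env block from repository secrets, and a secret that was never set expands to an empty string. A small guard at the top of a run step can therefore fail fast with a clear message. Here is a minimal sketch. require_env is a hypothetical helper, not part of configure-aws-credentials; it uses the ::error:: workflow command so the message is highlighted in the Actions log.

```bash
#!/usr/bin/env bash
# Hypothetical helper: fail if any named environment variable is unset or empty.
require_env() {
  local name status=0
  for name in "$@"; do
    # ${!name} is Bash indirect expansion: the value of the variable called $name.
    if [ -z "${!name:-}" ]; then
      echo "::error::$name is not set" >&2
      status=1
    fi
  done
  return "$status"
}
```

In a run step, this would be require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_REGION || exit 1.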

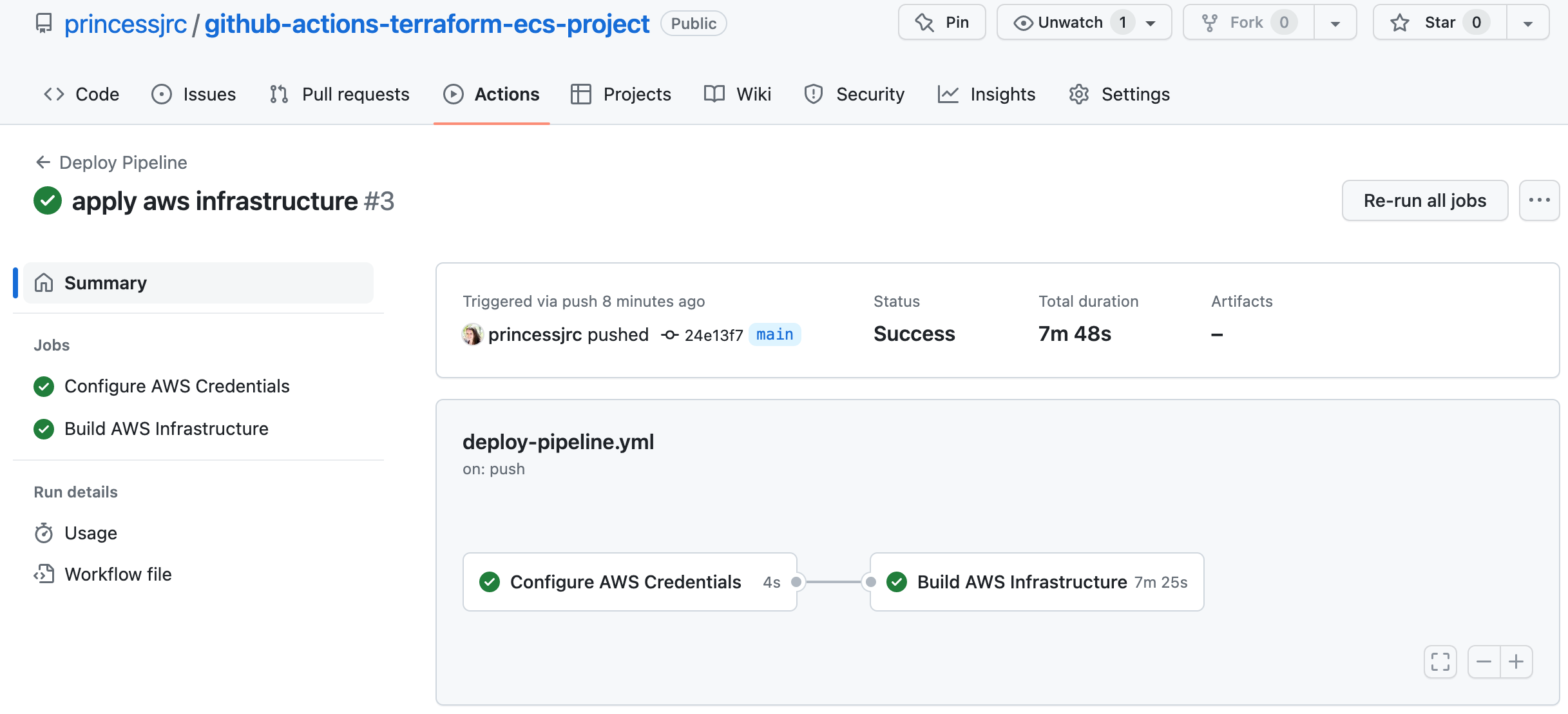

Step 9: Create a GitHub Actions Job to Deploy AWS Infrastructure

In this job, we will use Terraform on an Ubuntu GitHub-hosted runner to build our infrastructure in AWS.

A GitHub runner is a machine that executes the jobs and actions defined in GitHub Actions workflows. GitHub-hosted runners run on infrastructure that GitHub manages, which lets us build, test, and deploy software within the GitHub Actions CI/CD platform.

```yaml
  # Build AWS infrastructure
  deploy_aws_infrastructure:
    name: Build AWS Infrastructure
    needs: configure_aws_credentials
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repository
        uses: actions/checkout@v3

      - name: Set up Terraform
        uses: hashicorp/setup-terraform@v2
        with:
          terraform_version: 1.1.7

      - name: Run Terraform initialize
        working-directory: ./iac
        run: terraform init

      - name: Run Terraform apply/destroy
        working-directory: ./iac
        run: terraform ${{ env.TERRAFORM_ACTION }} -auto-approve

      - name: Get Terraform output image name
        if: env.TERRAFORM_ACTION == 'apply'
        working-directory: ./iac
        run: |
          IMAGE_NAME_VALUE=$(terraform output -raw image_name | grep -Eo "^[^:]+" | tail -n 1)
          echo "IMAGE_NAME=$IMAGE_NAME_VALUE" >> $GITHUB_ENV

      - name: Get Terraform output domain name
        if: env.TERRAFORM_ACTION == 'apply'
        working-directory: ./iac
        run: |
          DOMAIN_NAME_VALUE=$(terraform output -raw domain_name | grep -Eo "^[^:]+" | tail -n 1)
          echo "DOMAIN_NAME=$DOMAIN_NAME_VALUE" >> $GITHUB_ENV

      - name: Get Terraform output RDS endpoint
        if: env.TERRAFORM_ACTION == 'apply'
        working-directory: ./iac
        run: |
          RDS_ENDPOINT_VALUE=$(terraform output -raw rds_endpoint | grep -Eo "^[^:]+" | tail -n 1)
          echo "RDS_ENDPOINT=$RDS_ENDPOINT_VALUE" >> $GITHUB_ENV

      - name: Get Terraform output image tag
        if: env.TERRAFORM_ACTION == 'apply'
        working-directory: ./iac
        run: |
          IMAGE_TAG_VALUE=$(terraform output -raw image_tag | grep -Eo "^[^:]+" | tail -n 1)
          echo "IMAGE_TAG=$IMAGE_TAG_VALUE" >> $GITHUB_ENV

      - name: Get Terraform output private data subnet az1 id
        if: env.TERRAFORM_ACTION == 'apply'
        working-directory: ./iac
        run: |
          PRIVATE_DATA_SUBNET_AZ1_ID_VALUE=$(terraform output -raw private_data_subnet_az1_id | grep -Eo "^[^:]+" | tail -n 1)
          echo "PRIVATE_DATA_SUBNET_AZ1_ID=$PRIVATE_DATA_SUBNET_AZ1_ID_VALUE" >> $GITHUB_ENV

      - name: Get Terraform output runner security group id
        if: env.TERRAFORM_ACTION == 'apply'
        working-directory: ./iac
        run: |
          RUNNER_SECURITY_GROUP_ID_VALUE=$(terraform output -raw runner_security_group_id | grep -Eo "^[^:]+" | tail -n 1)
          echo "RUNNER_SECURITY_GROUP_ID=$RUNNER_SECURITY_GROUP_ID_VALUE" >> $GITHUB_ENV

      - name: Get Terraform output task definition name
        if: env.TERRAFORM_ACTION == 'apply'
        working-directory: ./iac
        run: |
          TASK_DEFINITION_NAME_VALUE=$(terraform output -raw task_definition_name | grep -Eo "^[^:]+" | tail -n 1)
          echo "TASK_DEFINITION_NAME=$TASK_DEFINITION_NAME_VALUE" >> $GITHUB_ENV

      - name: Get Terraform output ecs cluster name
        if: env.TERRAFORM_ACTION == 'apply'
        working-directory: ./iac
        run: |
          ECS_CLUSTER_NAME_VALUE=$(terraform output -raw ecs_cluster_name | grep -Eo "^[^:]+" | tail -n 1)
          echo "ECS_CLUSTER_NAME=$ECS_CLUSTER_NAME_VALUE" >> $GITHUB_ENV

      - name: Get Terraform output ecs service name
        if: env.TERRAFORM_ACTION == 'apply'
        working-directory: ./iac
        run: |
          ECS_SERVICE_NAME_VALUE=$(terraform output -raw ecs_service_name | grep -Eo "^[^:]+" | tail -n 1)
          echo "ECS_SERVICE_NAME=$ECS_SERVICE_NAME_VALUE" >> $GITHUB_ENV

      - name: Get Terraform output environment file name
        if: env.TERRAFORM_ACTION == 'apply'
        working-directory: ./iac
        run: |
          ENVIRONMENT_FILE_NAME_VALUE=$(terraform output -raw environment_file_name | grep -Eo "^[^:]+" | tail -n 1)
          echo "ENVIRONMENT_FILE_NAME=$ENVIRONMENT_FILE_NAME_VALUE" >> $GITHUB_ENV

      - name: Get Terraform output env file bucket name
        if: env.TERRAFORM_ACTION == 'apply'
        working-directory: ./iac
        run: |
          ENV_FILE_BUCKET_NAME_VALUE=$(terraform output -raw env_file_bucket_name | grep -Eo "^[^:]+" | tail -n 1)
          echo "ENV_FILE_BUCKET_NAME=$ENV_FILE_BUCKET_NAME_VALUE" >> $GITHUB_ENV

      - name: Print GITHUB_ENV contents
        run: cat $GITHUB_ENV

    outputs:
      terraform_action: ${{ env.TERRAFORM_ACTION }}
      image_name: ${{ env.IMAGE_NAME }}
      domain_name: ${{ env.DOMAIN_NAME }}
      rds_endpoint: ${{ env.RDS_ENDPOINT }}
      image_tag: ${{ env.IMAGE_TAG }}
      private_data_subnet_az1_id: ${{ env.PRIVATE_DATA_SUBNET_AZ1_ID }}
      runner_security_group_id: ${{ env.RUNNER_SECURITY_GROUP_ID }}
      task_definition_name: ${{ env.TASK_DEFINITION_NAME }}
      ecs_cluster_name: ${{ env.ECS_CLUSTER_NAME }}
      ecs_service_name: ${{ env.ECS_SERVICE_NAME }}
      environment_file_name: ${{ env.ENVIRONMENT_FILE_NAME }}
      env_file_bucket_name: ${{ env.ENV_FILE_BUCKET_NAME }}
```

actions/checkout -- This action checks-out your repository under $GITHUB_WORKSPACE, so your workflow can access it.

hashicorp/setup-terraform -- The hashicorp/setup-terraform action is a JavaScript action that sets up Terraform CLI in your GitHub Actions workflow.
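Each "Get Terraform output" step pipes the value through grep -Eo "^[^:]+" | tail -n 1. The sketch below assumes the following reading of that filter:

- For each line, it keeps only the text before the first colon, and tail keeps the last result.
- This discards the extra lines that the setup-terraform wrapper can print around the value. Its ::debug:: lines begin with a colon, so grep -o finds nothing in them.
- It also strips a trailing :3306 port from an RDS endpoint. The Flyway step later adds :3306 back.

clean_tf_output is a hypothetical name. The sample wrapper text in the test is illustrative, not captured from a real run. Setting the terraform_wrapper input of hashicorp/setup-terraform to false is another way to get the bare value, though a port would still need trimming.

```bash
#!/usr/bin/env bash
# Same filter as the workflow steps: for each line, keep the text before the first
# colon (lines starting with ":" produce no match), then keep only the last match.
clean_tf_output() {
  grep -Eo "^[^:]+" | tail -n 1
}
```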

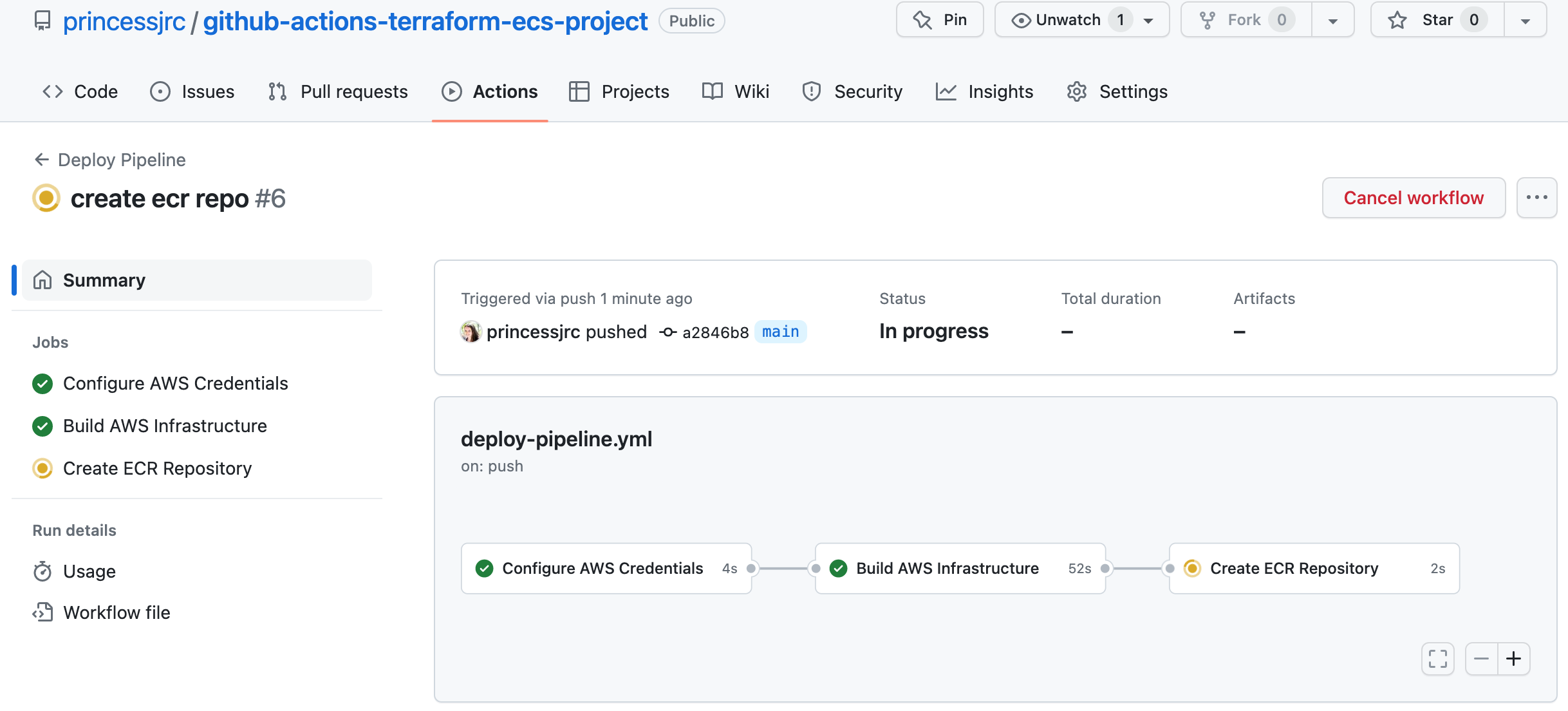

Step 10: Create a GitHub Actions Job to Create an Amazon ECR Repository

In this job, we will create a repository in Amazon ECR which we will use to store our Docker image.

```yaml
  # Create ECR repository
  create_ecr_repository:
    name: Create ECR Repository
    needs:
      - configure_aws_credentials
      - deploy_aws_infrastructure
    if: needs.deploy_aws_infrastructure.outputs.terraform_action != 'destroy'
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repository
        uses: actions/checkout@v3

      - name: Check if ECR repository exists
        env:
          IMAGE_NAME: ${{ needs.deploy_aws_infrastructure.outputs.image_name }}
        run: |
          result=$(aws ecr describe-repositories --repository-names "${{ env.IMAGE_NAME }}" | jq -r '.repositories[0].repositoryName')
          echo "repo_name=$result" >> $GITHUB_ENV
        continue-on-error: true

      - name: Create ECR repository
        env:
          IMAGE_NAME: ${{ needs.deploy_aws_infrastructure.outputs.image_name }}
        if: env.repo_name != env.IMAGE_NAME
        run: |
          aws ecr create-repository --repository-name ${{ env.IMAGE_NAME }}
```

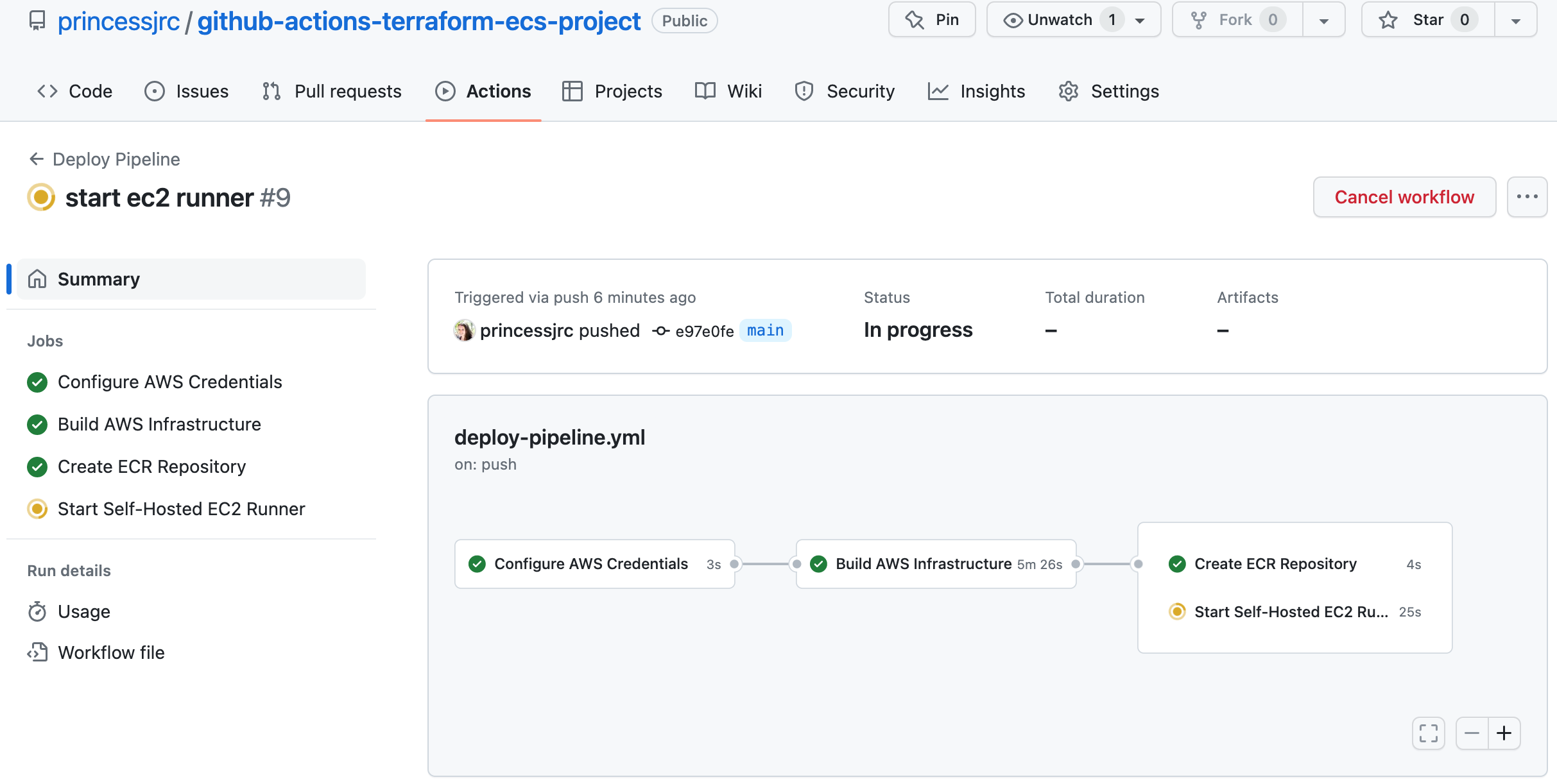

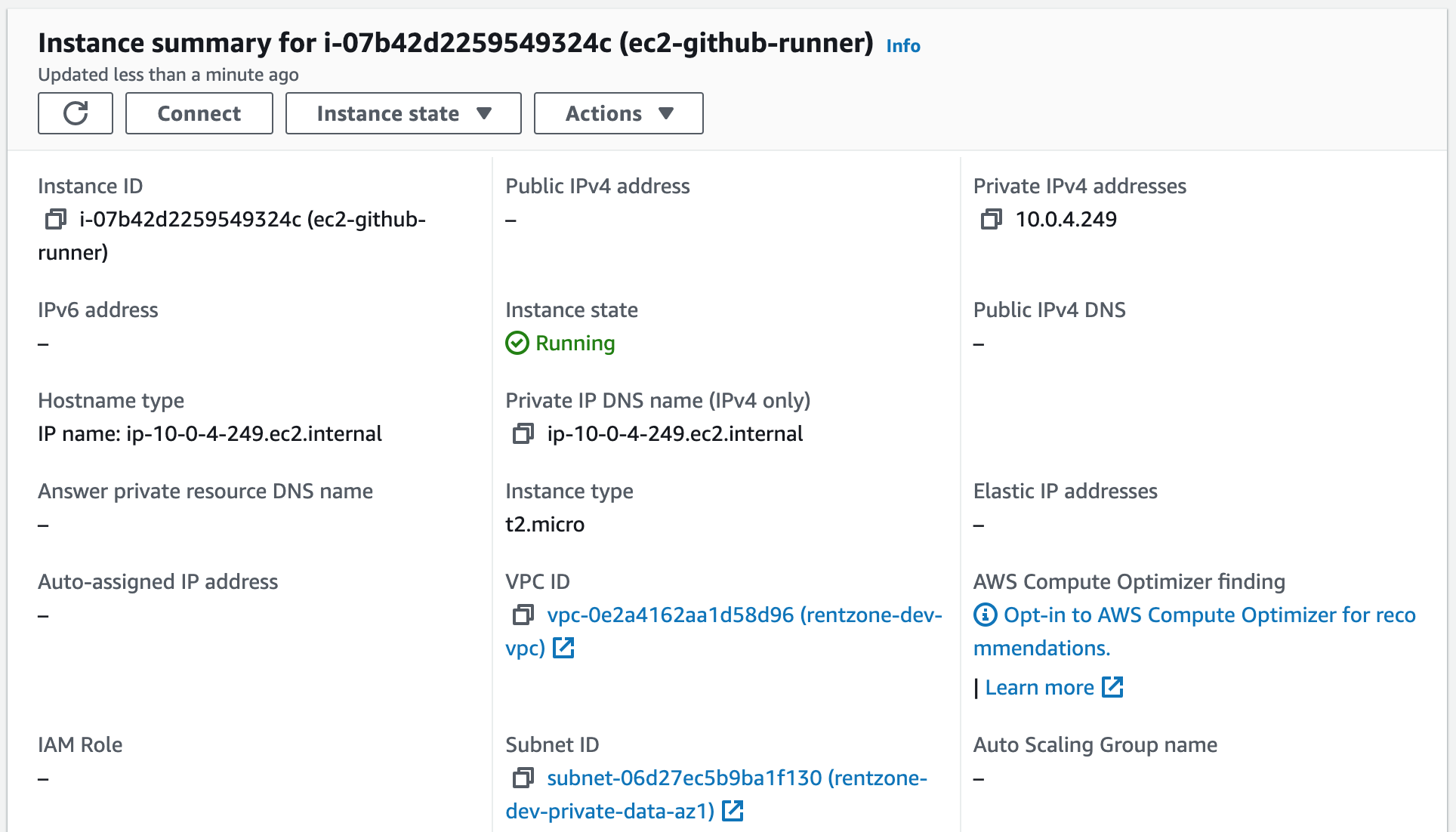

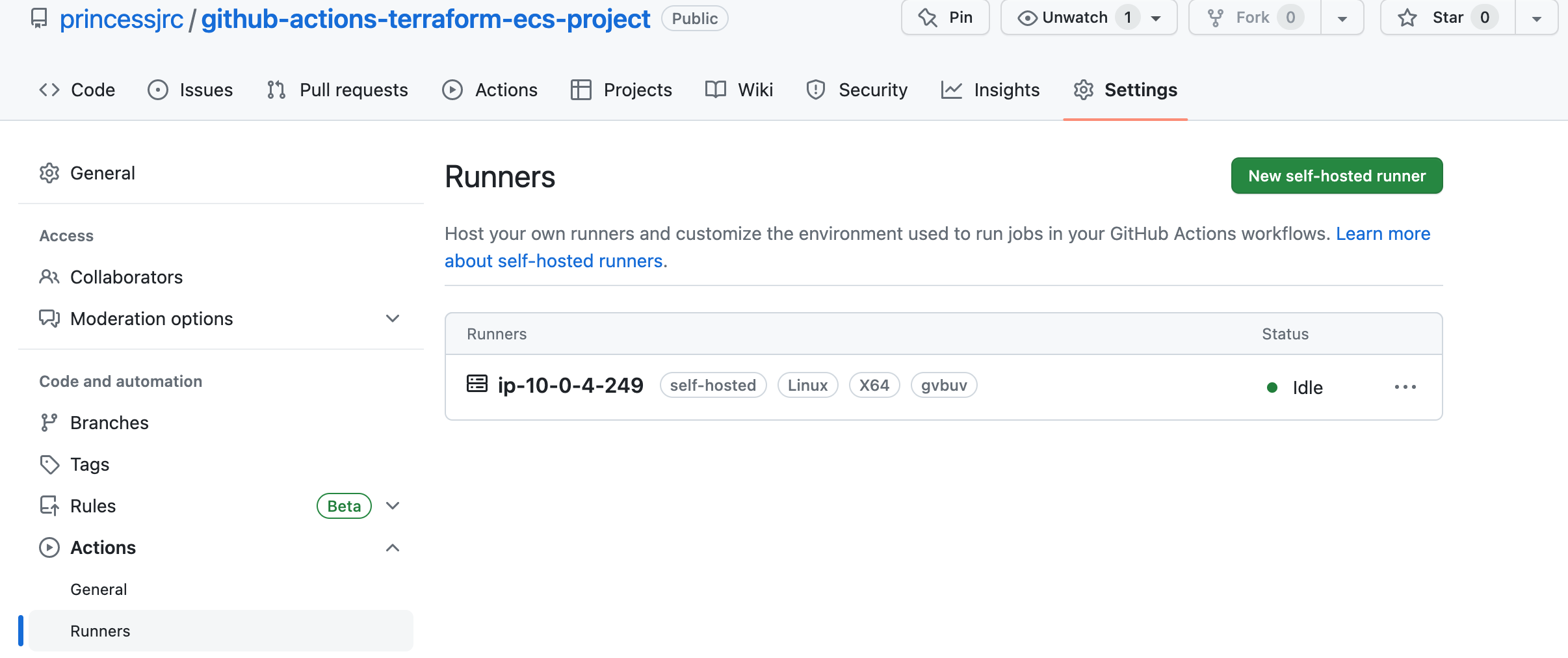
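The two steps in this job check for the repository and create it only if it is missing. The same idea fits in one shell function. Here is a minimal sketch, assuming configured AWS CLI credentials; ensure_ecr_repo is a hypothetical helper. It relies on aws ecr describe-repositories exiting non-zero when the repository does not exist, so it needs neither jq nor a comparison across steps. If describe fails for another reason, such as missing permissions, the create call will usually fail too and surface that error.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create an ECR repository only if it does not exist yet.
ensure_ecr_repo() {
  local name="$1"
  if aws ecr describe-repositories --repository-names "$name" >/dev/null 2>&1; then
    echo "exists: $name"
  else
    aws ecr create-repository --repository-name "$name" >/dev/null && echo "created: $name"
  fi
}
```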

Step 11: Create a GitHub Actions Job to Start a Self-Hosted Runner

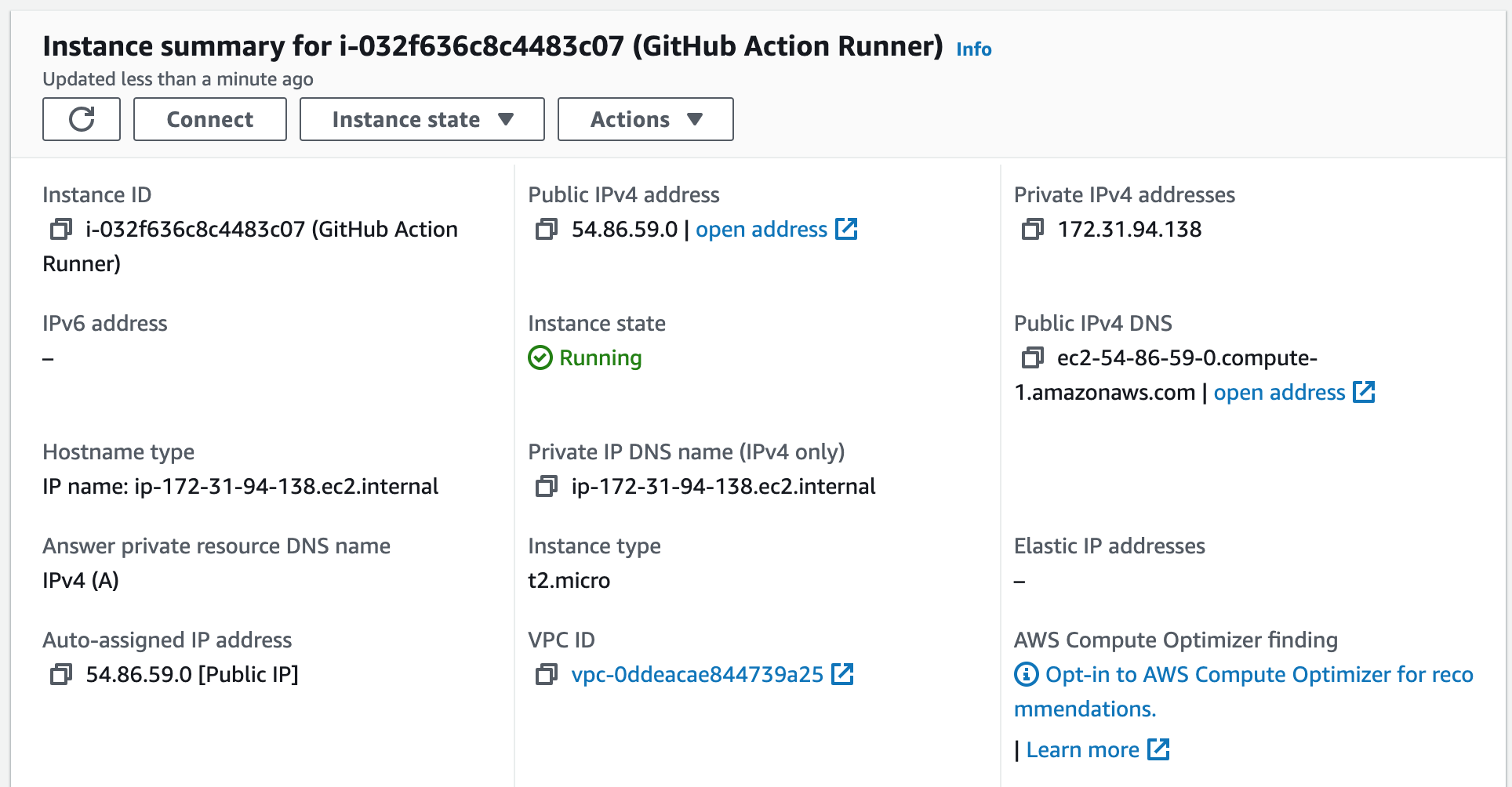

A self-hosted runner is a GitHub runner that we manage on our own infrastructure. It executes the workflows and actions defined in GitHub Actions, giving us flexibility and control over the execution environment.

We are going to start a self-hosted EC2 runner in our private data subnet and use it for two tasks:

- building our Docker image and pushing it to the Amazon ECR repository we just created;
- running our database migration with Flyway.

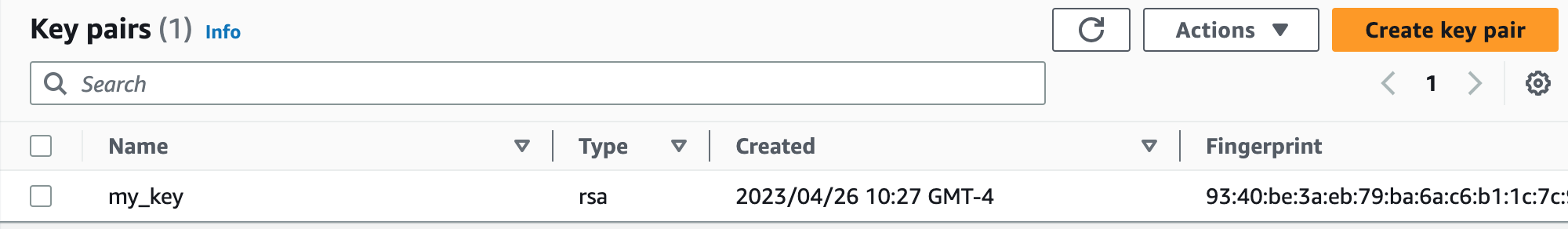

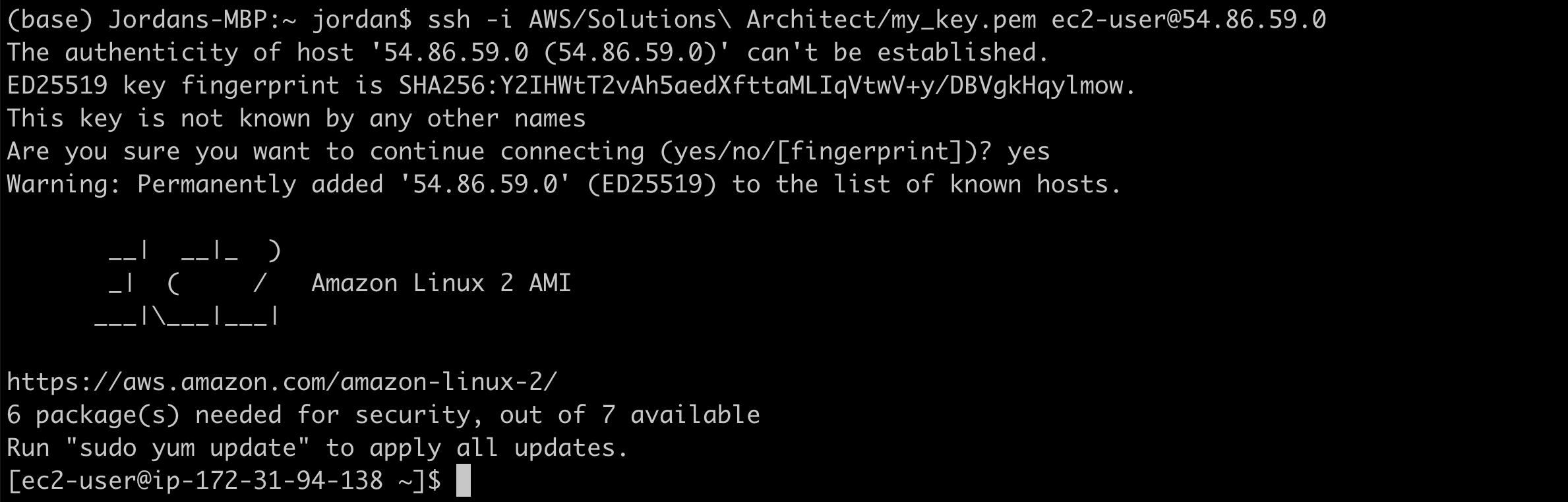

1. Create a key pair. We will use this to SSH into our EC2 instance.

ssh -i < key pair > ec2-user@< public-ip address >

sudo yum update -y && \

sudo yum install docker -y && \

sudo yum install git -y && \

sudo yum install libicu -y && \

sudo systemctl enable docker

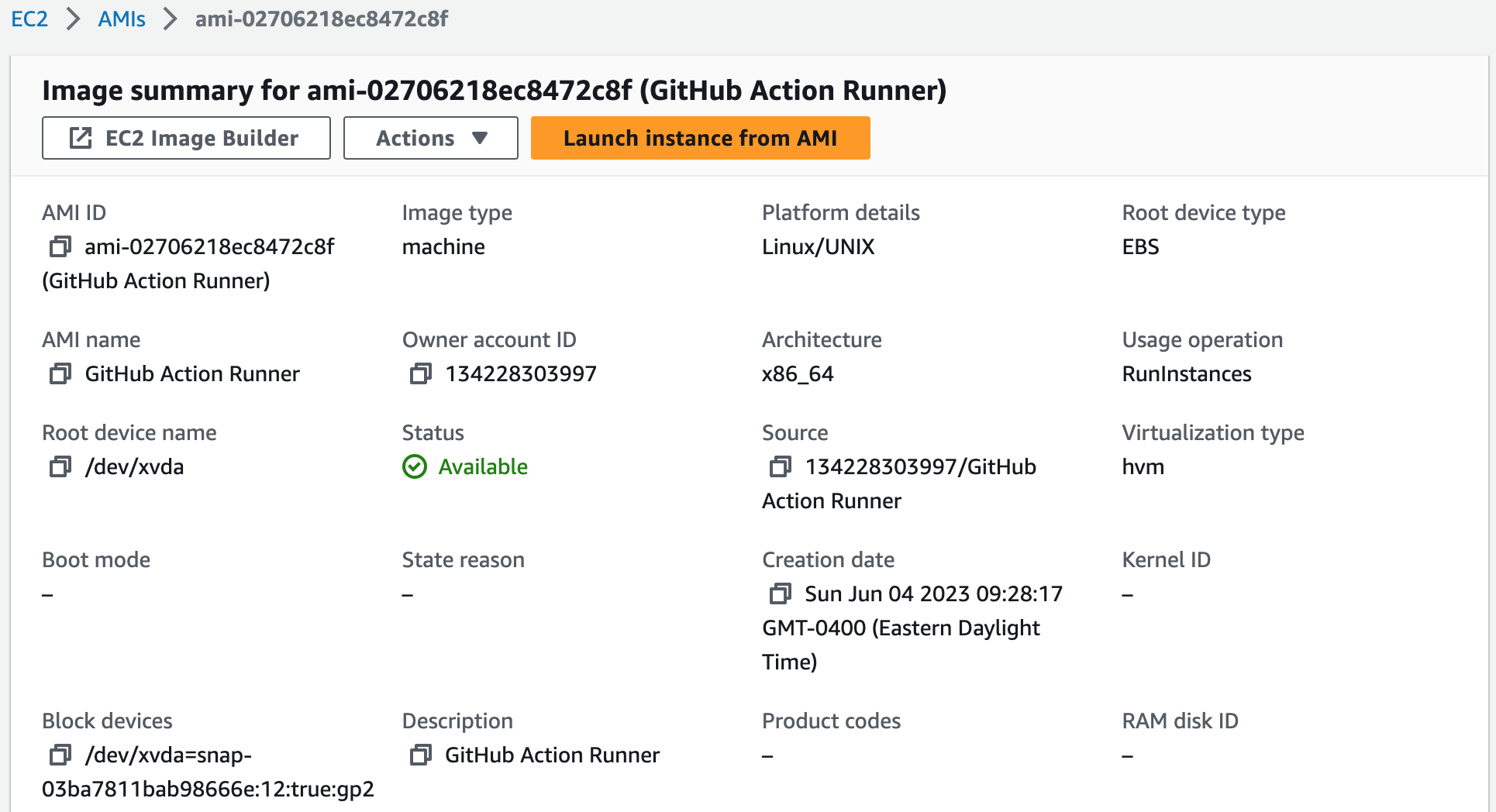

5. Create an AMI. This is so our GitHub Actions job can use this AMI to start our self-hosted runner.
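The AMI can be created from the console, or with the AWS CLI as sketched here, assuming the instance we prepared is still available. create_runner_ami is a hypothetical helper, and the AMI name passed to it is ours to choose. By default, EC2 reboots the instance before taking the image unless --no-reboot is passed. The image ID it prints is what goes into the ec2-image-id input of the start_runner job.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create an AMI from an instance and wait until it is usable.
# Usage: create_runner_ami <instance-id> <ami-name>
create_runner_ami() {
  local instance_id="$1" name="$2" image_id
  image_id="$(aws ec2 create-image \
    --instance-id "$instance_id" \
    --name "$name" \
    --query ImageId --output text)" || return 1
  # Blocks until the image state is "available" (or the waiter gives up).
  aws ec2 wait image-available --image-ids "$image_id" || return 1
  echo "$image_id"
}
```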

```yaml
  # Start self-hosted EC2 runner
  start_runner:
    name: Start Self-Hosted EC2 Runner
    needs:
      - configure_aws_credentials
      - deploy_aws_infrastructure
    if: needs.deploy_aws_infrastructure.outputs.terraform_action != 'destroy'
    runs-on: ubuntu-latest
    steps:
      - name: Check for running EC2 runner
        run: |
          instances=$(aws ec2 describe-instances --filters "Name=tag:Name,Values=ec2-github-runner" "Name=instance-state-name,Values=running" --query 'Reservations[].Instances[].InstanceId' --output text)
          if [ -n "$instances" ]; then
            echo "runner-running=true" >> $GITHUB_ENV
          else
            echo "runner-running=false" >> $GITHUB_ENV
          fi

      - name: Start EC2 runner
        if: env.runner-running != 'true'
        id: start-ec2-runner
        uses: machulav/ec2-github-runner@v2
        with:
          mode: start
          github-token: ${{ secrets.PERSONAL_ACCESS_TOKEN }}
          # ID of the AMI created in step 5; replace it with your own AMI ID
          ec2-image-id: ami-02706218ec8472c8f
          ec2-instance-type: t2.micro
          subnet-id: ${{ needs.deploy_aws_infrastructure.outputs.private_data_subnet_az1_id }}
          security-group-id: ${{ needs.deploy_aws_infrastructure.outputs.runner_security_group_id }}
          aws-resource-tags: >
            [
              {"Key": "Name", "Value": "ec2-github-runner"},
              {"Key": "GitHubRepository", "Value": "${{ github.repository }}"}
            ]
    outputs:
      label: ${{ steps.start-ec2-runner.outputs.label }}
      ec2-instance-id: ${{ steps.start-ec2-runner.outputs.ec2-instance-id }}
```

machulav/ec2-github-runner -- Start your EC2 self-hosted runner right before you need it. Run the job on it. Finally, stop it when you finish. And all this automatically as a part of your GitHub Actions workflow.

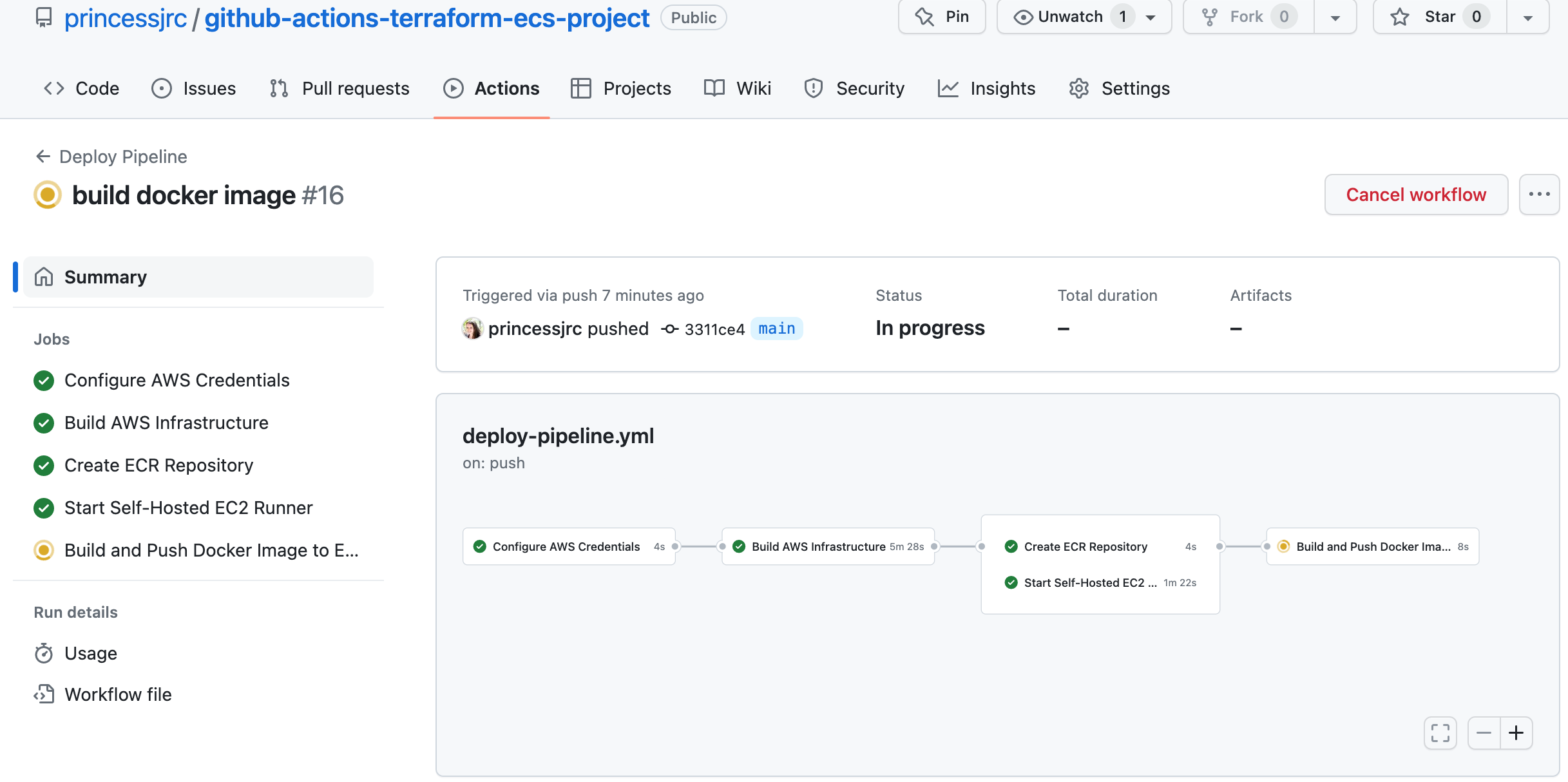

Step 12: Create a GitHub Actions Job to Build and Push a Docker Image to Amazon ECR

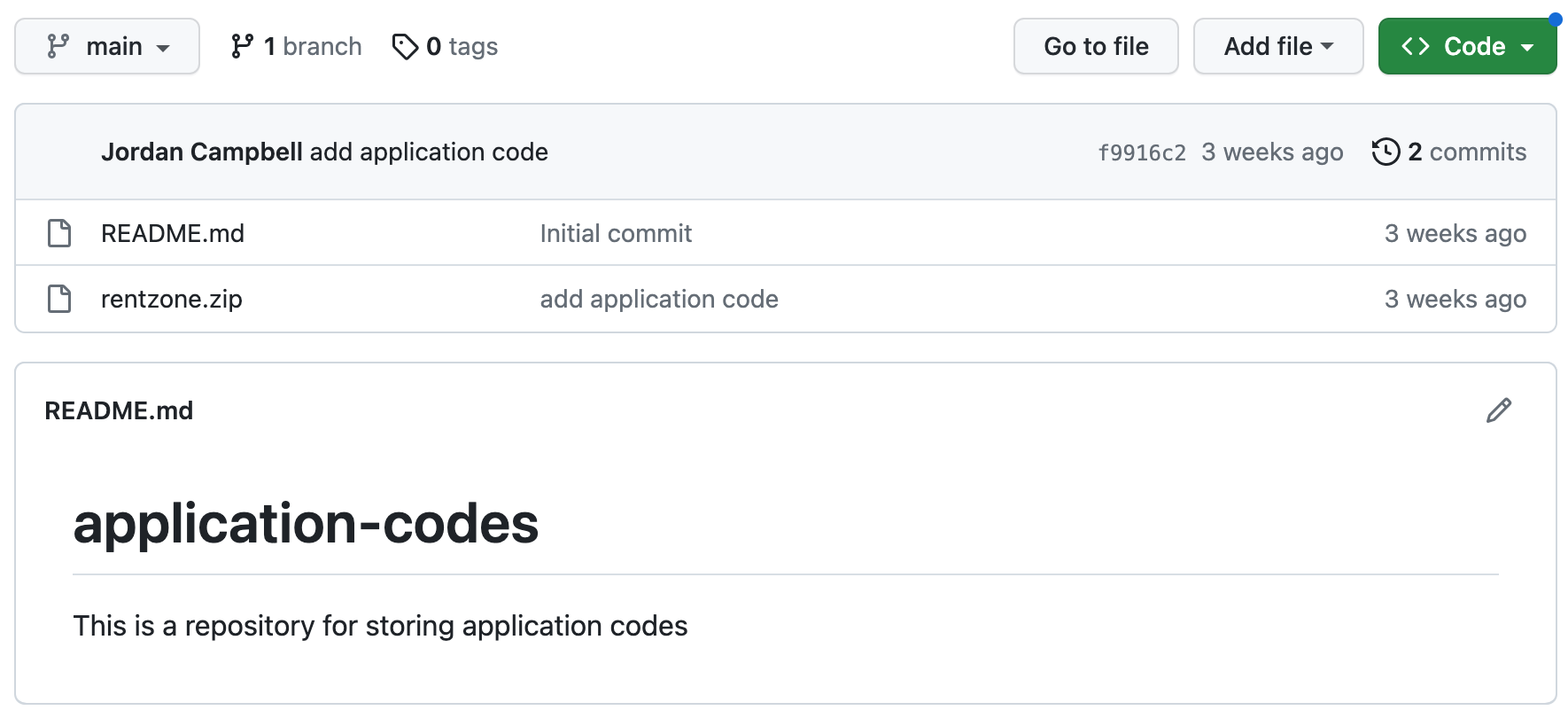

We will build the Docker image for our application and push it to the Amazon ECR repository we created.

1. Set up a GitHub repository to store our application's code.

2. Clone the repository to our local computer:

```bash
git clone <ssh clone url>
```

3. Add our code to the local repository and push it back to GitHub.

4. Create the Dockerfile. In summary, this Dockerfile sets up an environment with Apache, PHP, MySQL, and the other dependencies needed to run a web application. It does the following, then starts Apache as the entrypoint for the container:
   - clones our application's GitHub repository;
   - extracts the application code;
   - modifies the configuration;
   - sets permissions.

5. Create the AppServiceProvider.php file. Our application will use this file to redirect HTTP traffic to HTTPS.

6. Now we will create the job to build our Docker image and push it to Amazon ECR.

```yaml
  # Build and push Docker image to ECR
  build_and_push_image:
    name: Build and Push Docker Image to ECR
    needs:
      - configure_aws_credentials
      - deploy_aws_infrastructure
      - create_ecr_repository
      - start_runner
    if: needs.deploy_aws_infrastructure.outputs.terraform_action != 'destroy'
    runs-on: self-hosted
    steps:
      - name: Checkout repository
        uses: actions/checkout@v3

      - name: Login to Amazon ECR
        uses: aws-actions/amazon-ecr-login@v1

      - name: Build Docker image
        env:
          DOMAIN_NAME: ${{ needs.deploy_aws_infrastructure.outputs.domain_name }}
          RDS_ENDPOINT: ${{ needs.deploy_aws_infrastructure.outputs.rds_endpoint }}
          IMAGE_NAME: ${{ needs.deploy_aws_infrastructure.outputs.image_name }}
          IMAGE_TAG: ${{ needs.deploy_aws_infrastructure.outputs.image_tag }}
        run: |
          docker build \
            --build-arg PERSONAL_ACCESS_TOKEN=${{ secrets.PERSONAL_ACCESS_TOKEN }} \
            --build-arg GITHUB_USERNAME=${{ env.GITHUB_USERNAME }} \
            --build-arg REPOSITORY_NAME=${{ env.REPOSITORY_NAME }} \
            --build-arg WEB_FILE_ZIP=${{ env.WEB_FILE_ZIP }} \
            --build-arg WEB_FILE_UNZIP=${{ env.WEB_FILE_UNZIP }} \
            --build-arg DOMAIN_NAME=${{ env.DOMAIN_NAME }} \
            --build-arg RDS_ENDPOINT=${{ env.RDS_ENDPOINT }} \
            --build-arg RDS_DB_NAME=${{ secrets.RDS_DB_NAME }} \
            --build-arg RDS_DB_USERNAME=${{ secrets.RDS_DB_USERNAME }} \
            --build-arg RDS_DB_PASSWORD=${{ secrets.RDS_DB_PASSWORD }} \
            -t ${{ env.IMAGE_NAME }}:${{ env.IMAGE_TAG }} .

      # Use the same tag the image was built with, so this works for any IMAGE_TAG
      # (an untagged name would default to :latest).
      - name: Retag Docker image
        env:
          IMAGE_NAME: ${{ needs.deploy_aws_infrastructure.outputs.image_name }}
          IMAGE_TAG: ${{ needs.deploy_aws_infrastructure.outputs.image_tag }}
        run: |
          docker tag ${{ env.IMAGE_NAME }}:${{ env.IMAGE_TAG }} ${{ secrets.ECR_REGISTRY }}/${{ env.IMAGE_NAME }}:${{ env.IMAGE_TAG }}

      - name: Push Docker Image to Amazon ECR
        env:
          IMAGE_NAME: ${{ needs.deploy_aws_infrastructure.outputs.image_name }}
          IMAGE_TAG: ${{ needs.deploy_aws_infrastructure.outputs.image_tag }}
        run: |
          docker push ${{ secrets.ECR_REGISTRY }}/${{ env.IMAGE_NAME }}:${{ env.IMAGE_TAG }}
```

aws-actions/amazon-ecr-login -- Logs in the local Docker client to one or more Amazon ECR Private registries or an Amazon ECR Public registry.
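The ECR_REGISTRY secret holds the registry host for our account. For a private registry, that host has the form <account-id>.dkr.ecr.<region>.amazonaws.com. Here is a minimal sketch of deriving it, then tagging and pushing an image with an explicit tag. It assumes configured AWS CLI credentials and a prior ECR login. ecr_registry, current_account_id, and push_to_ecr are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Build the private ECR registry host name from an account ID and region.
ecr_registry() {
  local account_id="$1" region="$2"
  echo "${account_id}.dkr.ecr.${region}.amazonaws.com"
}

# Look up the current account ID with STS (assumes configured credentials).
current_account_id() {
  aws sts get-caller-identity --query Account --output text
}

# Tag a local image for ECR and push it, keeping the same tag on both sides.
# Usage: push_to_ecr <registry> <image-name> <image-tag>
push_to_ecr() {
  local registry="$1" name="$2" tag="$3"
  docker tag "${name}:${tag}" "${registry}/${name}:${tag}" || return 1
  docker push "${registry}/${name}:${tag}"
}
```

For example: push_to_ecr "$(ecr_registry "$(current_account_id)" us-east-1)" <image name> <image tag>.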

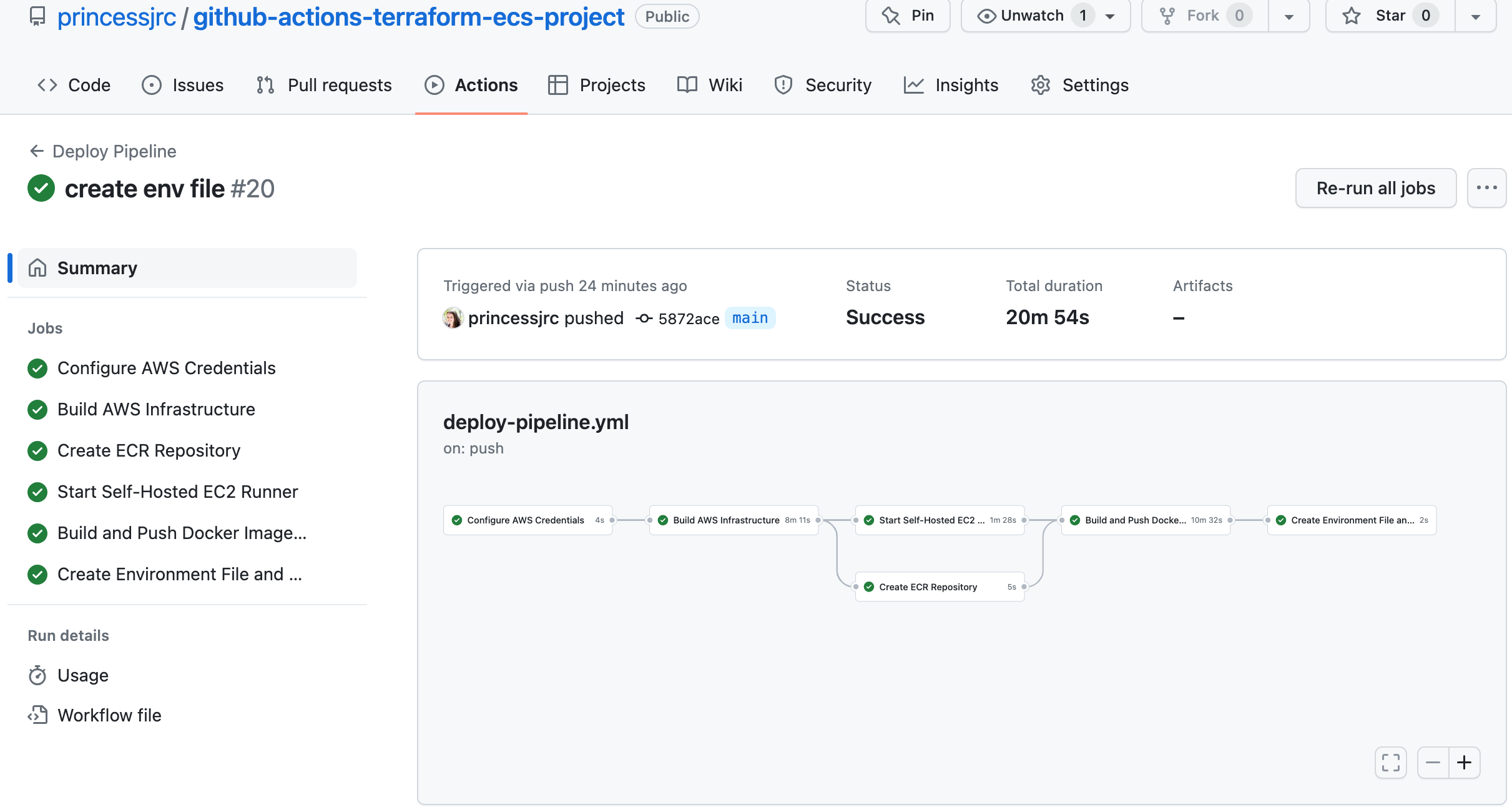

Step 13: Create a GitHub Actions Job to Export the Environment Variables into the S3 Bucket

We will now build a job that will store all the arguments we use to build the Docker image in a file. It will copy the file into an S3 bucket so that the ECS Fargate containers can reference the variables we stored in the file.

```yaml
  # Create environment file and export to S3
  export_env_variables:
    name: Create Environment File and Export to S3
    needs:
      - configure_aws_credentials
      - deploy_aws_infrastructure
      - start_runner
      - build_and_push_image
    if: needs.deploy_aws_infrastructure.outputs.terraform_action != 'destroy'
    runs-on: ubuntu-latest
    steps:
      - name: Export environment variable values to file
        env:
          DOMAIN_NAME: ${{ needs.deploy_aws_infrastructure.outputs.domain_name }}
          RDS_ENDPOINT: ${{ needs.deploy_aws_infrastructure.outputs.rds_endpoint }}
          ENVIRONMENT_FILE_NAME: ${{ needs.deploy_aws_infrastructure.outputs.environment_file_name }}
        run: |
          echo "PERSONAL_ACCESS_TOKEN=${{ secrets.PERSONAL_ACCESS_TOKEN }}" > ${{ env.ENVIRONMENT_FILE_NAME }}
          echo "GITHUB_USERNAME=${{ env.GITHUB_USERNAME }}" >> ${{ env.ENVIRONMENT_FILE_NAME }}
          echo "REPOSITORY_NAME=${{ env.REPOSITORY_NAME }}" >> ${{ env.ENVIRONMENT_FILE_NAME }}
          echo "WEB_FILE_ZIP=${{ env.WEB_FILE_ZIP }}" >> ${{ env.ENVIRONMENT_FILE_NAME }}
          echo "WEB_FILE_UNZIP=${{ env.WEB_FILE_UNZIP }}" >> ${{ env.ENVIRONMENT_FILE_NAME }}
          echo "DOMAIN_NAME=${{ env.DOMAIN_NAME }}" >> ${{ env.ENVIRONMENT_FILE_NAME }}
          echo "RDS_ENDPOINT=${{ env.RDS_ENDPOINT }}" >> ${{ env.ENVIRONMENT_FILE_NAME }}
          echo "RDS_DB_NAME=${{ secrets.RDS_DB_NAME }}" >> ${{ env.ENVIRONMENT_FILE_NAME }}
          echo "RDS_DB_USERNAME=${{ secrets.RDS_DB_USERNAME }}" >> ${{ env.ENVIRONMENT_FILE_NAME }}
          echo "RDS_DB_PASSWORD=${{ secrets.RDS_DB_PASSWORD }}" >> ${{ env.ENVIRONMENT_FILE_NAME }}

      - name: Upload environment file to S3
        env:
          ENVIRONMENT_FILE_NAME: ${{ needs.deploy_aws_infrastructure.outputs.environment_file_name }}
          ENV_FILE_BUCKET_NAME: ${{ needs.deploy_aws_infrastructure.outputs.env_file_bucket_name }}
        run: aws s3 cp ${{ env.ENVIRONMENT_FILE_NAME }} s3://${{ env.ENV_FILE_BUCKET_NAME }}/${{ env.ENVIRONMENT_FILE_NAME }}
```

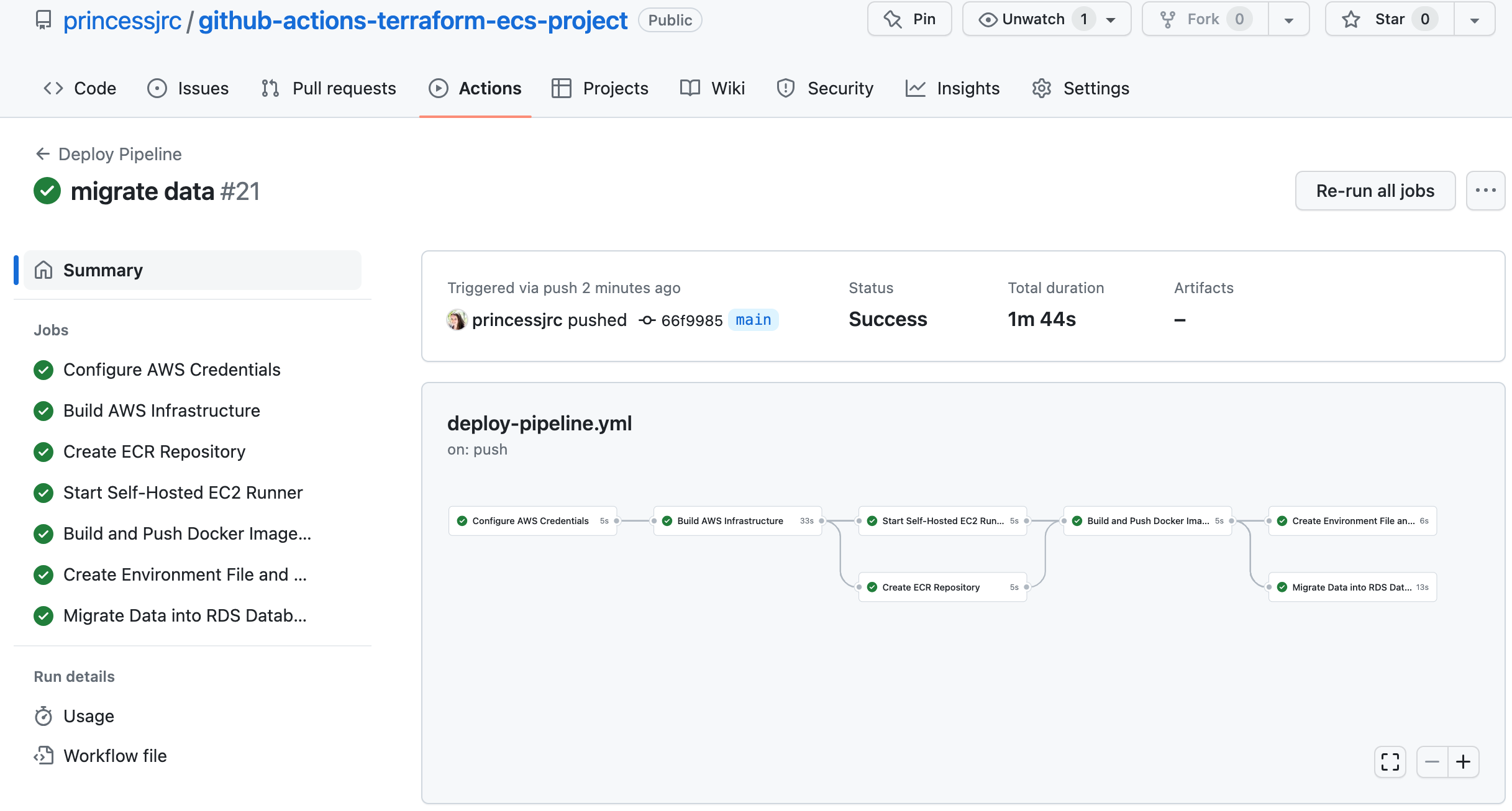
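The first run step writes one KEY=value line per variable. It uses > for the first line so each run starts from an empty file, then >> for the rest. Here is a minimal sketch of the same idea as a function; write_env_file is a hypothetical name.

Two assumptions here are worth checking against the ECS documentation:

- an ECS task reads such a file from S3 through the environmentFiles setting of its container definition;
- the file name is expected to end in .env.

This file contains the personal access token and the database password, so the bucket should stay private.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write VAR=value lines for the named shell variables to a file,
# truncating the file first (like the first `>` in the workflow step).
# Usage: write_env_file <file> VAR1 VAR2 ...
write_env_file() {
  local file="$1" name
  shift
  : > "$file"
  for name in "$@"; do
    # ${!name} is Bash indirect expansion: the value of the variable called $name.
    printf '%s=%s\n' "$name" "${!name}" >> "$file"
  done
}
```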

Step 14: Create a GitHub Actions Job to Migrate Data into the RDS Database with Flyway

In this job, we will use Flyway to migrate the SQL data for our application into the RDS database.

1. Open our project folder in VS Code and create a new folder called 'sql'.

2. Add the SQL script we want to migrate into our RDS database to this new folder. Flyway only picks up scripts that follow its naming convention for versioned migrations, such as V1__init.sql.

3. Create the job below, which sets up Flyway on our self-hosted runner and uses it to move the data into our RDS database.

```yaml
  # Migrate data into RDS database with Flyway
  migrate_data:
    name: Migrate Data into RDS Database with Flyway
    needs:
      - deploy_aws_infrastructure
      - start_runner
      - build_and_push_image
    if: needs.deploy_aws_infrastructure.outputs.terraform_action != 'destroy'
    runs-on: self-hosted
    steps:
      - name: Checkout repository
        uses: actions/checkout@v3

      - name: Download Flyway
        run: |
          wget -qO- https://repo1.maven.org/maven2/org/flywaydb/flyway-commandline/${{ env.FLYWAY_VERSION }}/flyway-commandline-${{ env.FLYWAY_VERSION }}-linux-x64.tar.gz | tar xvz && sudo ln -s `pwd`/flyway-${{ env.FLYWAY_VERSION }}/flyway /usr/local/bin

      - name: Remove the placeholder (sql) directory
        run: |
          rm -rf flyway-${{ env.FLYWAY_VERSION }}/sql/

      - name: Copy the sql folder into the Flyway sub-directory
        run: |
          cp -r sql flyway-${{ env.FLYWAY_VERSION }}/

      - name: Run Flyway migrate command
        env:
          FLYWAY_URL: jdbc:mysql://${{ needs.deploy_aws_infrastructure.outputs.rds_endpoint }}:3306/${{ secrets.RDS_DB_NAME }}
          FLYWAY_USER: ${{ secrets.RDS_DB_USERNAME }}
          FLYWAY_PASSWORD: ${{ secrets.RDS_DB_PASSWORD }}
          FLYWAY_LOCATION: filesystem:sql
        working-directory: ./flyway-${{ env.FLYWAY_VERSION }}
        run: |
          flyway -url=${{ env.FLYWAY_URL }} \
            -user=${{ env.FLYWAY_USER }} \
            -password=${{ env.FLYWAY_PASSWORD }} \
            -locations=${{ env.FLYWAY_LOCATION }} migrate
```

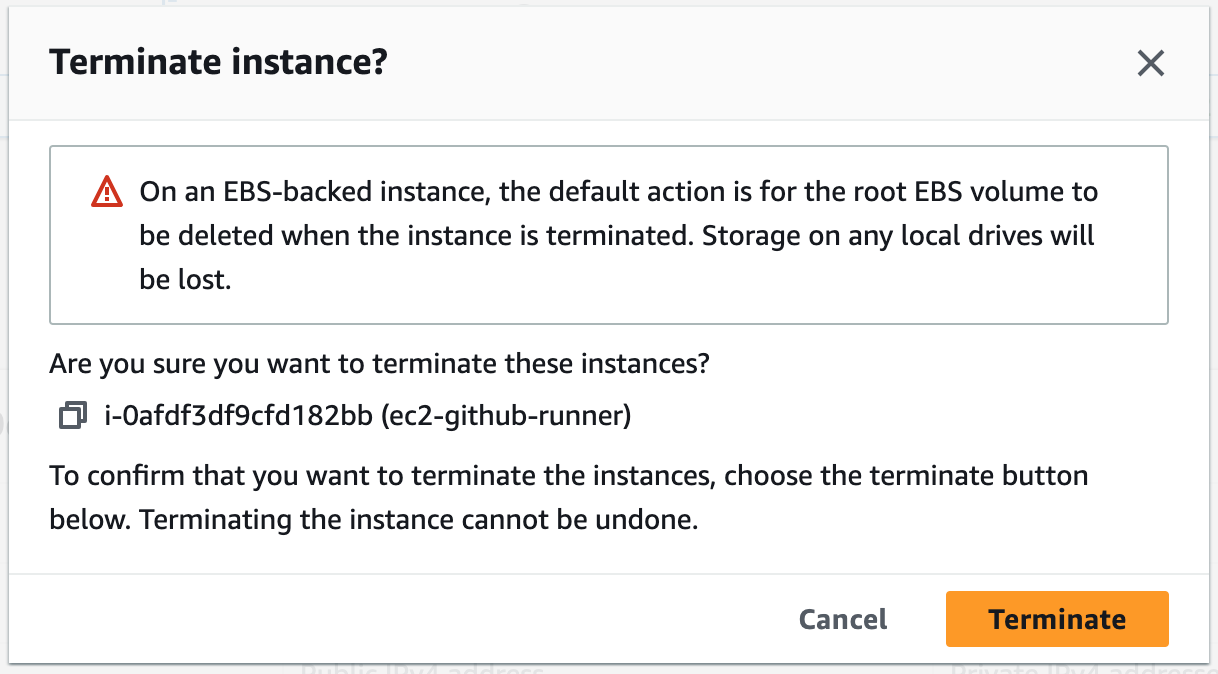

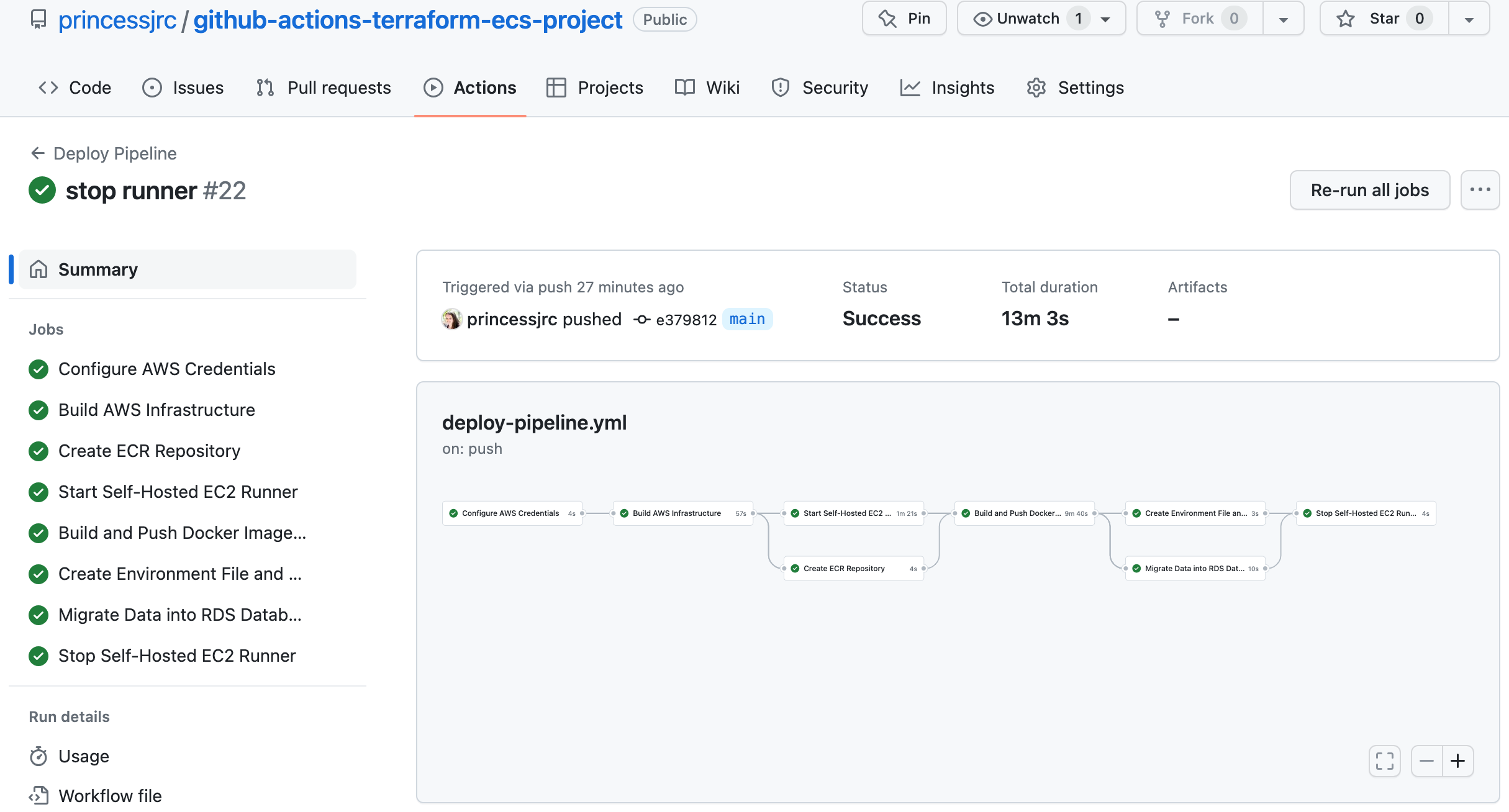
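Flyway applies versioned migrations only from files named V<version>__<description>.sql (note the double underscore). By default, it skips other files without an error, so a misnamed script never runs. Here is a minimal sketch of a check to run before pushing. It assumes only versioned migrations are used; repeatable R__ scripts would need their own pattern. check_migration_names is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Versioned migration names: V, a version (digits separated by "." or "_"),
# two underscores, a description, and the .sql suffix.
FLYWAY_VERSIONED_RE='^V[0-9]+([._][0-9]+)*__.+\.sql$'

# Hypothetical helper: print every .sql file in a folder whose name does not match.
check_migration_names() {
  local dir="${1:-sql}" path base status=0
  for path in "$dir"/*.sql; do
    [ -e "$path" ] || continue   # the glob matched nothing
    base="$(basename "$path")"
    if ! [[ "$base" =~ $FLYWAY_VERSIONED_RE ]]; then
      echo "$base"
      status=1
    fi
  done
  return "$status"
}
```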

Step 15: Create a GitHub Actions Job to Stop the Self-Hosted Runner

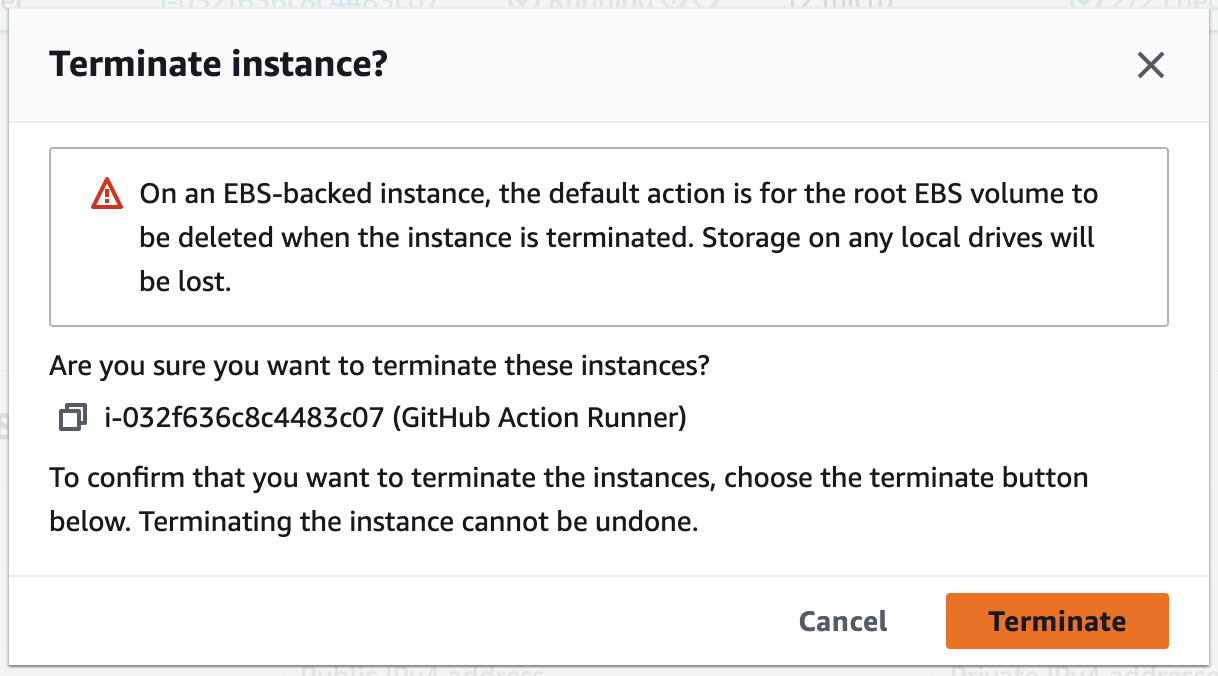

This job terminates the self-hosted runner, which we no longer need once it has built our Docker image and migrated our application's data into the RDS database.

1. In the Management Console, terminate the self-hosted runner that is currently running. Our pipeline will create a new self-hosted runner when it runs, and the job we are about to create will terminate that runner once it has completed the tasks it was created for.

```yaml
  # Stop the self-hosted EC2 runner
  stop_runner:
    name: Stop Self-Hosted EC2 Runner
    needs:
      - configure_aws_credentials
      - deploy_aws_infrastructure
      - start_runner
      - build_and_push_image
      - export_env_variables
      - migrate_data
    if: needs.deploy_aws_infrastructure.outputs.terraform_action != 'destroy' && always()
    runs-on: ubuntu-latest
    steps:
      - name: Stop EC2 runner
        uses: machulav/ec2-github-runner@v2
        with:
          mode: stop
          github-token: ${{ secrets.PERSONAL_ACCESS_TOKEN }}
          label: ${{ needs.start_runner.outputs.label }}
          ec2-instance-id: ${{ needs.start_runner.outputs.ec2-instance-id }}
```

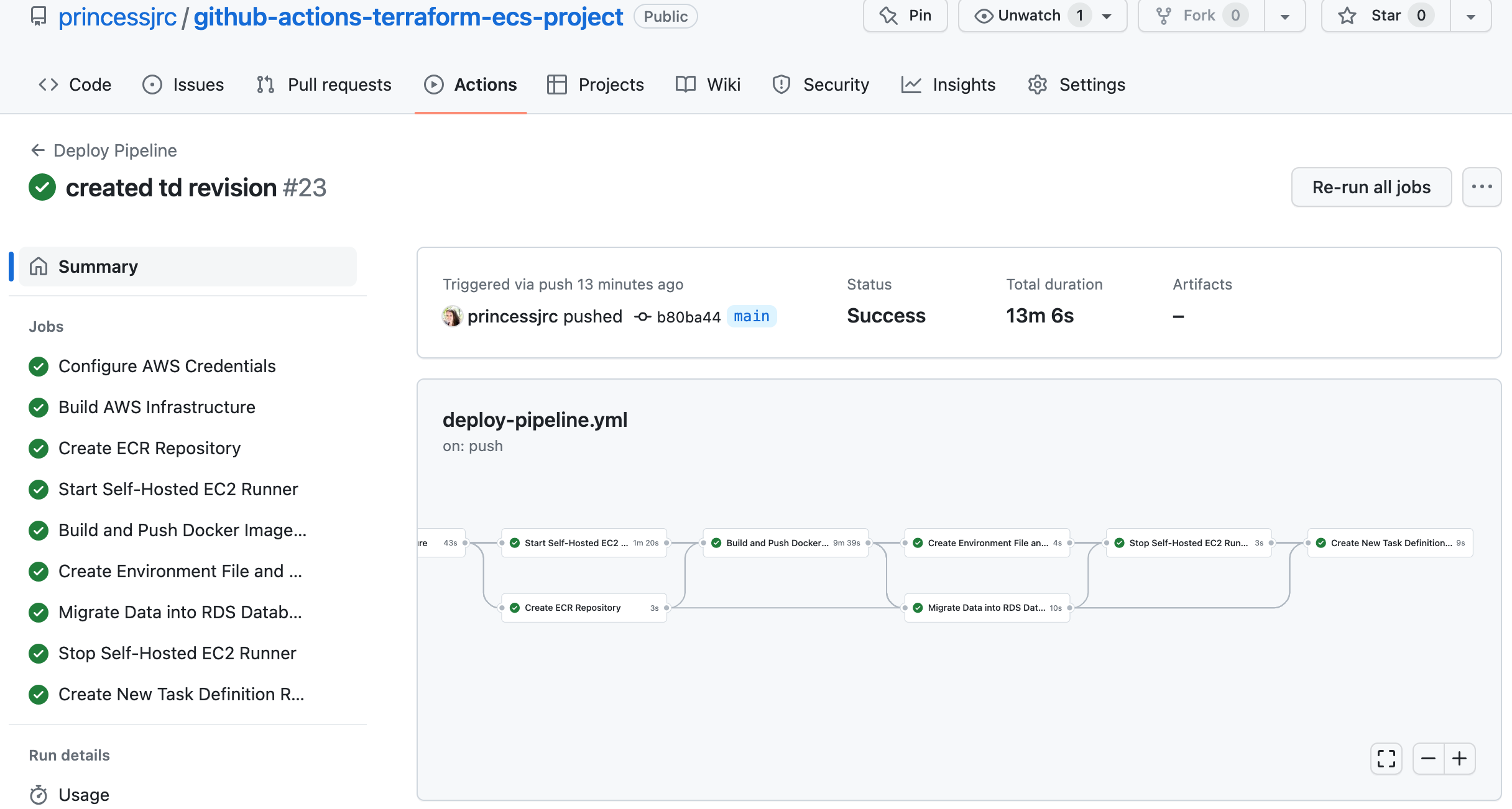
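Because this job uses always(), it runs even when an earlier job fails. However, it depends on the label and instance ID that start_runner outputs. If the start step was skipped because a runner was already running, those outputs are empty and this job cannot stop that instance. Here is a minimal clean-up sketch for that case. It assumes configured AWS CLI credentials and the Name tag set in the start_runner job; terminate_stale_runners is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: terminate every running instance tagged Name=ec2-github-runner.
terminate_stale_runners() {
  local ids
  ids="$(aws ec2 describe-instances \
    --filters "Name=tag:Name,Values=ec2-github-runner" "Name=instance-state-name,Values=running" \
    --query 'Reservations[].Instances[].InstanceId' --output text)" || return 1
  if [ -z "$ids" ]; then
    echo "no runner instances found"
    return 0
  fi
  # $ids is intentionally unquoted so each whitespace-separated ID becomes an argument.
  # shellcheck disable=SC2086
  aws ec2 terminate-instances --instance-ids $ids >/dev/null && echo "terminated: $ids"
}
```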

Step 16: Create a GitHub Actions Job to Create a New ECS Task Definition Revision

In this job, we will update the task definition for the ECS service hosting our application with the new image we pushed to Amazon ECR.

```yaml
  # Create new task definition revision
  create_td_revision:
    name: Create New Task Definition Revision
    needs:
      - configure_aws_credentials
      - deploy_aws_infrastructure
      - create_ecr_repository
      - start_runner
      - build_and_push_image
      - export_env_variables
      - migrate_data
      - stop_runner
    if: needs.deploy_aws_infrastructure.outputs.terraform_action != 'destroy'
    runs-on: ubuntu-latest
    steps:
      - name: Create new task definition revision
        env:
          ECS_FAMILY: ${{ needs.deploy_aws_infrastructure.outputs.task_definition_name }}
          ECS_IMAGE: ${{ secrets.ECR_REGISTRY }}/${{ needs.deploy_aws_infrastructure.outputs.image_name }}:${{ needs.deploy_aws_infrastructure.outputs.image_tag }}
        run: |
          # Get existing task definition
          TASK_DEFINITION=$(aws ecs describe-task-definition --task-definition ${{ env.ECS_FAMILY }})

          # Update the existing task definition by performing the following actions:
          # 1. Update the `containerDefinitions[0].image` to the new image we want to deploy
          # 2. Remove fields from the task definition that are not compatible with `register-task-definition` --cli-input-json
          NEW_TASK_DEFINITION=$(echo "$TASK_DEFINITION" | jq --arg IMAGE "${{ env.ECS_IMAGE }}" '.taskDefinition | .containerDefinitions[0].image = $IMAGE | del(.taskDefinitionArn) | del(.revision) | del(.status) | del(.requiresAttributes) | del(.compatibilities) | del(.registeredAt) | del(.registeredBy)')

          # Register the new task definition and capture the output as JSON
          NEW_TASK_INFO=$(aws ecs register-task-definition --cli-input-json "$NEW_TASK_DEFINITION")

          # Grab the new revision from the output
          NEW_TD_REVISION=$(echo "$NEW_TASK_INFO" | jq '.taskDefinition.revision')

          # Set the new revision as an environment variable
          echo "NEW_TD_REVISION=$NEW_TD_REVISION" >> $GITHUB_ENV
    outputs:
      new_td_revision: ${{ env.NEW_TD_REVISION }}
```

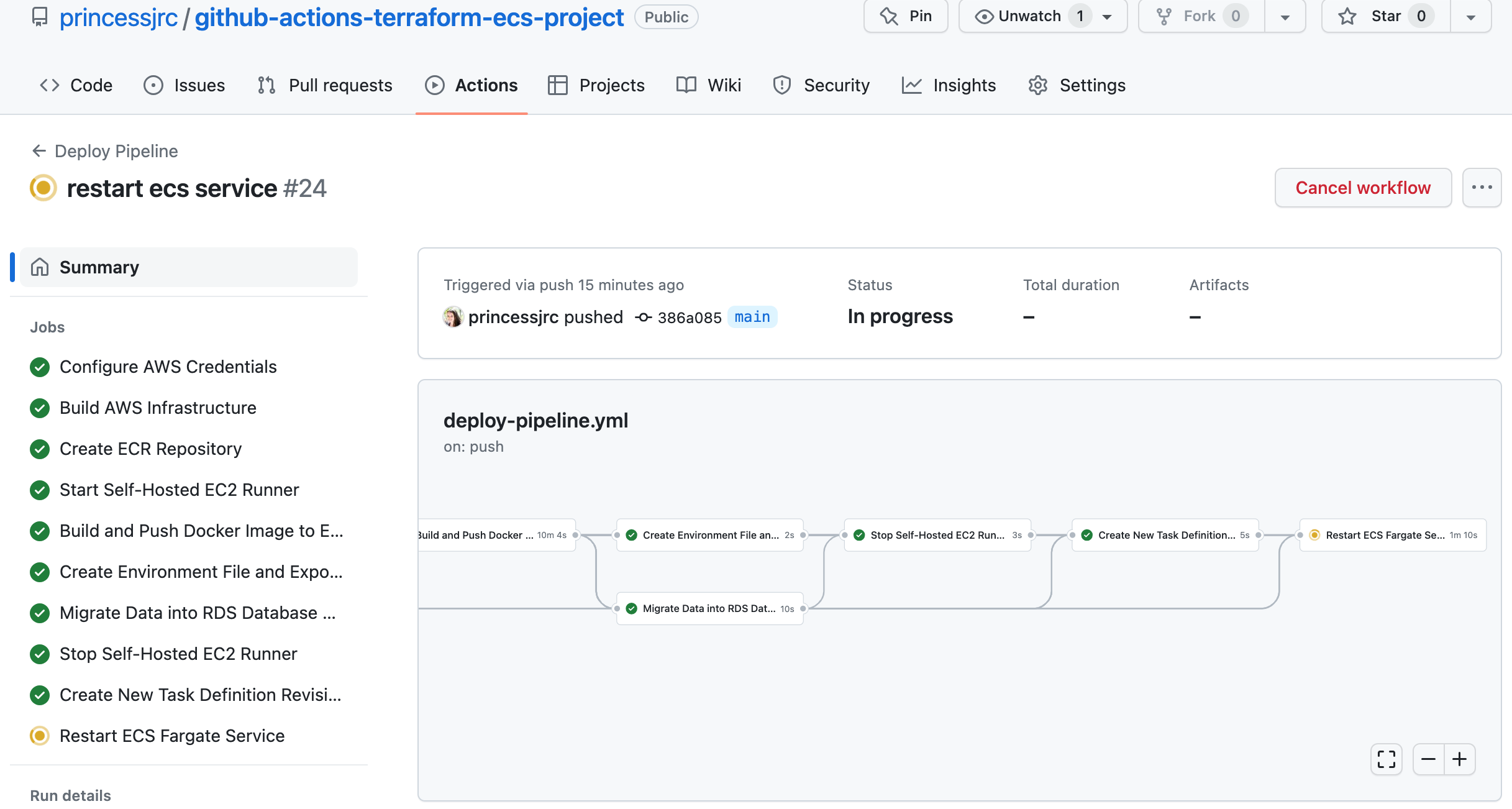
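The jq filter is the heart of this job. It takes the describe-task-definition output, swaps in the new image for the first container, and deletes the fields that register-task-definition will not accept in --cli-input-json. Here is a minimal sketch of the same filter as a reusable function. It assumes jq is installed (it is on GitHub's Ubuntu runners) and that our application is the first container in the task definition. new_task_definition_json is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `aws ecs describe-task-definition` JSON on stdin and print
# a register-task-definition input with a new image for the first container.
# Usage: new_task_definition_json <image-uri> < describe-output.json
new_task_definition_json() {
  local image="$1"
  jq -c --arg IMAGE "$image" '
    .taskDefinition
    | .containerDefinitions[0].image = $IMAGE
    | del(.taskDefinitionArn, .revision, .status, .requiresAttributes,
          .compatibilities, .registeredAt, .registeredBy)'
}
```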

Step 17: Create a GitHub Actions Job to Restart the ECS Fargate Service

Finally, we will restart the ECS service and force it to use the latest task definition revision we just created.

```yaml
  # Restart ECS Fargate service
  restart_ecs_service:
    name: Restart ECS Fargate Service
    needs:
      - configure_aws_credentials
      - deploy_aws_infrastructure
      - create_ecr_repository
      - start_runner
      - build_and_push_image
      - export_env_variables
      - migrate_data
      - stop_runner
      - create_td_revision
    if: needs.deploy_aws_infrastructure.outputs.terraform_action != 'destroy'
    runs-on: ubuntu-latest
    steps:
      - name: Update ECS Service
        env:
          ECS_CLUSTER_NAME: ${{ needs.deploy_aws_infrastructure.outputs.ecs_cluster_name }}
          ECS_SERVICE_NAME: ${{ needs.deploy_aws_infrastructure.outputs.ecs_service_name }}
          TD_NAME: ${{ needs.deploy_aws_infrastructure.outputs.task_definition_name }}
        run: |
          aws ecs update-service --cluster ${{ env.ECS_CLUSTER_NAME }} --service ${{ env.ECS_SERVICE_NAME }} --task-definition ${{ env.TD_NAME }}:${{ needs.create_td_revision.outputs.new_td_revision }} --force-new-deployment

      - name: Wait for ECS service to become stable
        env:
          ECS_CLUSTER_NAME: ${{ needs.deploy_aws_infrastructure.outputs.ecs_cluster_name }}
          ECS_SERVICE_NAME: ${{ needs.deploy_aws_infrastructure.outputs.ecs_service_name }}
        run: |
          aws ecs wait services-stable --cluster ${{ env.ECS_CLUSTER_NAME }} --services ${{ env.ECS_SERVICE_NAME }}
```
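The same two calls can be run by hand, for example to roll the service back to an earlier task definition revision. Here is a minimal sketch, assuming configured AWS CLI credentials. deploy_revision is a hypothetical helper; the cluster, service, and family names it takes are the ones our Terraform outputs. Note that aws ecs wait services-stable polls for a limited time, roughly ten minutes (40 checks, 15 seconds apart), before exiting with an error.

```bash
#!/usr/bin/env bash
# Hypothetical helper: point an ECS service at a specific task definition revision,
# force a new deployment, and wait until the service is stable.
# Usage: deploy_revision <cluster> <service> <family> <revision>
deploy_revision() {
  local cluster="$1" service="$2" family="$3" revision="$4"
  aws ecs update-service \
    --cluster "$cluster" \
    --service "$service" \
    --task-definition "${family}:${revision}" \
    --force-new-deployment >/dev/null || return 1
  aws ecs wait services-stable --cluster "$cluster" --services "$service"
}
```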